The Need for Speed: How We Reduced Service Latency by 98%

An overview of the steps we took to bring down the latency in our in-house experimentation platform.

By Rajendra Rusmana

Have you heard of Litmus? It’s Gojek’s own experimentation platform. In a nutshell, the service can return a list of experiments assigned to a particular customer. This allows either the mobile app or another backend service to tailor some customers’ experience differently, according to the experiment’s design. This gives Gojek product teams a lot of value in iterating product development and promoting growth. However, there is another side to this equation: increased latency.

By asking product teams to adopt Litmus in their flow, we are in effect asking them to add yet another source of latency in their service flow. An extra call to Litmus needed to be made on top of the existing processes and calls they are already making now, so for us it is important that this extra latency be as low as possible.

In this post, we explain how we achieved this objective.

Hunting down latency

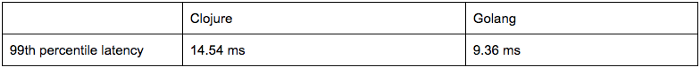

Our first step was to build a co-located Litmus subset that can be deployed alongside the backend service that would be calling it — thus removing the need for network hops. This subset was built in Golang (the full Litmus service is built in Clojure) because when we compared basic implementations in Golang and Clojure, the Golang one was faster.

How are we doing performance testing and profiling? Since our API is a GRPC one, we are using Ghz. You basically need to specify the endpoint, the .proto file, the payload, and test parameters. Ghz will run the test and give you a pretty output detailing the overall and percentiles of latency.

Summary:

Count: 10000

Total: 50.01 s

Slowest: 20.55 ms

Fastest: 1.90 ms

Average: 6.15 ms

Requests/sec: 199.96

Response time histogram:

1.895 [1] |

3.761 [937] |∎∎∎∎∎∎∎∎∎∎

5.626 [3479] |∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎

7.492 [3893] |∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎

9.358 [942] |∎∎∎∎∎∎∎∎∎∎

11.223 [233] |∎∎

13.089 [291] |∎∎∎

14.954 [160] |∎∎

16.820 [52] |∎

18.686 [10] |

20.551 [2] |

Latency distribution:

10% in 3.80 ms

25% in 4.56 ms

50% in 5.87 ms

75% in 6.99 ms

90% in 8.33 ms

95% in 11.33 ms

99% in 14.22 ms

Status code distribution:

[OK] 10000 responses

CPU Usage 50th Quantile 13.710496

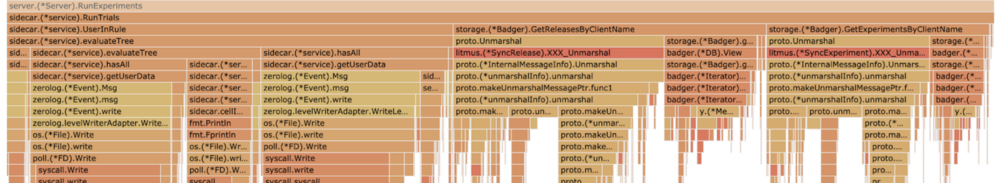

Memory Usage 50th Quantile 521.535488 MBAfter we get our latency numbers, we need to do some profiling to see which part of our code is taking the most time. This can be done using pprof tool for golang programs. (You can find out more about profiling with pprof here). Pprof offers several output formats but the most useful for profiling is the flame graph. It shows you what methods are being run and how long (as a percentage of caller runtime) each method takes. The longer the bar is, the larger the percentage of caller runtime is taken up by that method — so you can focus on the longest-running methods first.

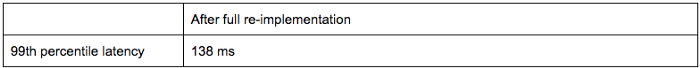

Once we picked our implementation language, we were hard at work re-implementing the rule engine that made sophisticated experiment rules possible (e.g. only give out an experiment when the customer is at a specific location). Then we redid the performance testing to test how the system handles the load and concurrent requests. To our surprise, the latency increased sharply.

Our target latency was 5 ms. This was unacceptable.

We did a profiling run with pprof to look for the culprit that made the latency figures soar. A rule of thumb when looking for sources of latency in your code hot path is that you need to look at anything that involves access to either disk or a networked resource. Below is an example of a flame graph generated by our profiling.

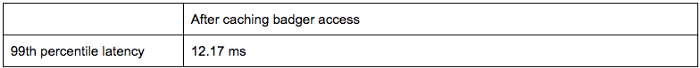

On our first pass, we found that our chosen database was the problem. To store experiment definitions we are using BadgerDB. Badger is a persistent, embedded KV store based on LSM trees that are optimised for SSD access patterns. As KV stores go it is quite fast but it is still accessing the disk. Badger did not have a built-in cache, so we implemented a cache above it so that we don’t always read from disk. This reduced our latency significantly.

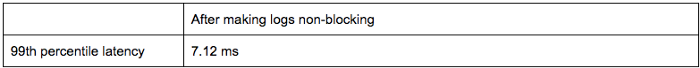

We were definitely on the right track here but the number is still above our target. We then looked for other candidates for further optimisation. It turned out that logging statements on the critical code path were also significant contributors. We are using the zerolog library which is a zero-allocation logging library. Although according to benchmarks this library is already quite fast — we have to remember that it still depends on what you write the logs to. In our case, we were writing to stdout and the benchmark was writing to ioutil.Discard. The latency of writing to stdout was slowing down our critical path.

So we proceeded to remove all logging…

Just kidding! 😅

We still need them to figure out what’s going on, especially when something’s wrong. However, we don’t necessarily need the writes to happen immediately and block the rest of the code from executing. The zerolog readme suggested that we could use the Diode library to make our writes non-blocking. Diode is a ring buffer that would store our logs until a goroutine can do the writes outside of the main thread. If the buffer gets filled up, however, it will overwrite the oldest item in the buffer so some messages might get dropped. Zerolog enables us to specify a function that would be run if this happens. To handle this, we can gauge the number of dropped messages using statsd and then adjust the buffer size or polling interval to avoid too many dropped messages. Through this improvement we were able to again reduce our latency:

This is still more than our target, but now it is already at 2% of what we had before. We can theoretically improve this further by reexamining our logs and log levels, only keeping what is absolutely critical if it turns out we do have to reach that target.

In the meantime, I want to ask you a question, does this kind of problem excite you? Are you itching to profile and optimise a bunch of Golang microservices? Help us to improve our experimentation platform and join our team! Check Gojek’s Career Page for further info.

Also, want to see our stories in your inbox? Sign up for our newsletter!