The Benefits of Partitions in PostgreSQL

How we use partitions in PostgreSQL, and the hiccups we faced on the way to improved system stability.

By Deepshi Garg

At Gojek, we continuously reassess, redesign, and rework our systems. One such system we recently worked upon is the one which stores and serves active bookings for customers.

Why the rework? Read this post for more details:

Our customer application uses this backend to show active bookings for a customer. If a customer initiates a booking, but (due to some delay or failure in our system) is not able to see it on the app, they might initiate another one. This would lead to multiple duplicate bookings, drivers being allocated to them, and a higher rate of cancellations. Thus, this system needed to be highly available and consistent.

In Gojek, over 3 million bookings are initiated in a day. A booking remains active for at most a week. This meant we needed our system to be able to handle large volumes of new bookings every day, each one ready to be served for at least a week.

Agreed! Thats not much data compared to our other systems. But high availability and consistency was a crucial requirement here.

Thus, we used PostgreSQL 10.5. Our schema looked something like

CREATE TABLE IF NOT EXISTS bookings (

booking_number PRIMARY KEY,

customer_id,

booking_details,

...

...

and so on

) CREATE INDEX ON (customer_id)booking_numberhas to be the Primary Key for there should be only one entry per booking- Index on

customer_idis needed in order to fetch all the active bookings for a customer.

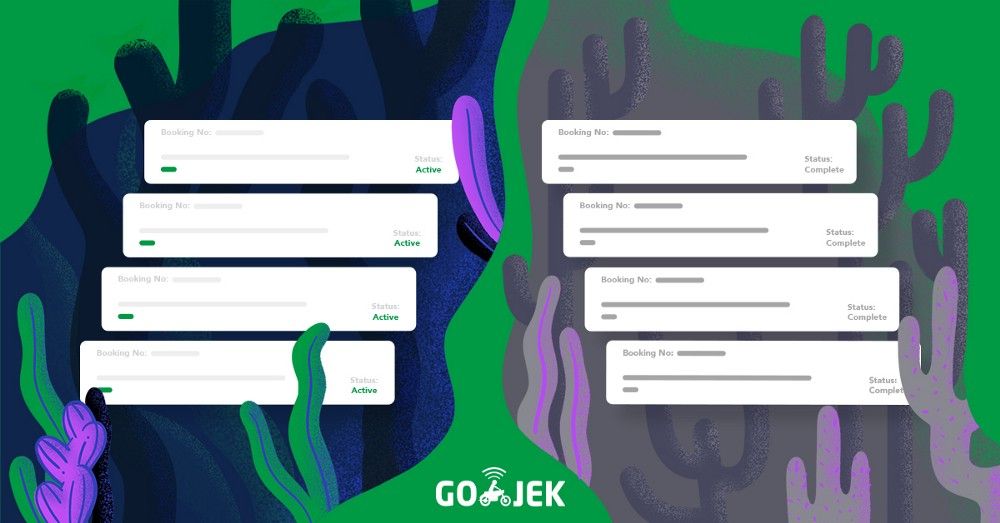

Since a booking is no longer useful once it is completed, we needed this schema to facilitate periodic cleanups. We now had 2 options :

- Delete a booking from the DB as and when it is completed.

- Partition the table with weekly timeframes, and delete the whole partition once all the bookings within it are completed.

Option 1 did not seem very viable because tuples that are deleted are not physically removed from the table.

- It would lead to bloated table sizes because of increased dead tuples. This means we would need larger amounts of disk space even with limited data.

- Also, frequent vacuum processes would be needed to clean these tuples. Vacuum slows down the DB response. Since active bookings are critical for customers, we could not afford this.

So we chose table partitioning.

PostgreSQL 10 provides native partitioning. We put up a scheduler to create and archive weekly partitions. Our schema now looked like this :

CREATE TABLE bookings (

booking_number PRIMARY KEY,

customer_id,

booking_details,

created_at_timestamp,

...

...

and so on

) PARTITION BY RANGE(created_at_timestamp)The functions to create and archive partitions were as follows :

CREATE FUNCTION create_weekly_partition(total_weeks) RETURNS void AS $$

BEGIN

FOR i IN 1..total_weeks LOOP

end_date = current_date + 7;

EXECUTE CREATE TABLE partition_i PARTITION OF bookings

FOR VALUES FROM (start_date) TO (end_date)

EXECUTE CREATE INDEX idx ON partition_i (customer_id);

start_date = start_date + 7;

END LOOP;

END;

$$ LANGUAGE PLPGSQL;

_____________________________________________________________

CREATE FUNCTION archive_weekly_partition(weeks_to_be_deleted) RETURNS void as $$

BEGIN

FOR i IN 0..weeks_to_be_deleted LOOP

EXECUTE DROP TABLE partition_i

END LOOP;

END;

$$ LANGUAGE PLPGSQL;total_weeks: Number of weekly partition tables to be created for future.weeks_to_be_deleted: Number of weekly partitions to be archived from the past- Yes, we need to define the index independently for each partition table. We cannot define it in the parent table.

This looked awesome 😎. It looked like we had cracked it. We only needed to store two of the weekly partition tables. This was a memory-efficient and reliable solution for our requirements of a consistent and available system.

But there was a storm coming 😅. One fine night, our archive_weekly_partitions job got stuck.But that shouldn’t be an issue right? It would only affect the table to be archived. All other tables should be available and queries should be served fine.

This is where we got it wrong.

DROP TABLEacquiresACCESS EXCLUSIVElock for themastertable and not the individual child tables. This means it locked our wholebookingstable, making all the active bookings unresponsive 🤦♀

The Solution

This had to be fixed. We went through documentation and blogs again. It was discovered that we should detach a partition from the master table before running any long queries. Hence, if this child table needs to acquire a lock, other children won’t be affected. Also, if needed, we can reattach it. Giving lock to the partition to be archived was fine, because we are not going to read from it anyway.

Thus, our new archive_weekly_partitions function looks like :

CREATE FUNCTION archive_weekly_partition(weeks_to_be_deleted) RETURNS void as $$

BEGIN

FOR i IN 0..weeks_to_be_deleted LOOP

EXECUTE ALTER TABLE orders DETACH PARTITION partition_i

EXECUTE DROP TABLE partition_i

END LOOP;

END;

$$ LANGUAGE PLPGSQL;Problem Solved. Everything runs smoothly now 🎊

Results:

We are currently serving a throughput of 120k requests per minute with a response time of 0.5 milliseconds from Postgres. 😎

Data gets archived and new partitions are getting created periodically without any complicated app-level partitioning logic or the integration of any external library.

Hope you found some useful information in this post. To have more stories like this delivered to your inbox, sign up for our newsletter! 🙌