Sharding 101: The Ways of Weaver

A guide to deploying GOJEK’s open-source reverse proxy to Kubernetes and using it to shard incoming requests.

By Gowtham Sai

In a previous post, we announced Weaver, GOJEK’s in-house reverse proxy (which we have open-sourced 🖖).

You can read it here:

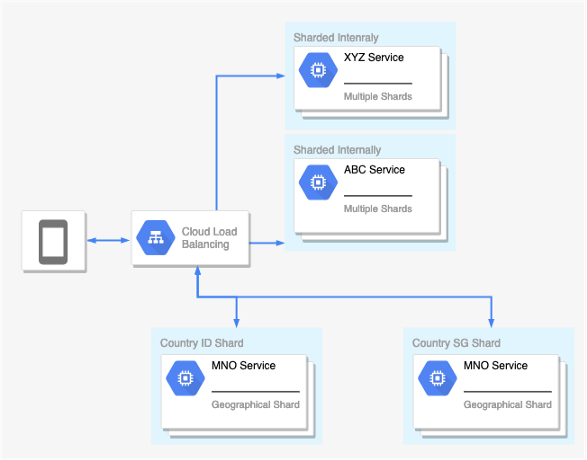

Here’s the TL;DR: Weaver is an HTTP reverse proxy with dynamic sharding strategies, written in Go. One of the challenges we face at GOJEK is the high volume of concurrent requests, which can be really tough to handle at scale if you don’t shard your clusters.

There are multiple ways to shard requests, including geographical sharding, algorithmic sharding based on order_id or customer_id, dynamic sharding, and entity-based sharding. As mentioned before, Weaver specialises in dynamic sharding.
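To make algorithmic sharding concrete, here is a minimal Python sketch that maps a customer_id to a shard by hashing it. The shard names and the shard_for helper are hypothetical and chosen only for illustration; this is not how Weaver itself is implemented.

```python
import hashlib

# Hypothetical shard names, for illustration only.
SHARDS = ["shard-0", "shard-1", "shard-2"]


def shard_for(customer_id, shards=SHARDS):
    """Pick a shard by hashing the key and taking it modulo the shard count."""
    # hashlib gives a hash that is stable across processes, unlike the
    # built-in hash(), which is randomised per process for strings.
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]
```

The same customer_id always lands on the same shard, but changing the number of shards remaps most keys, which is one reason a static algorithm can be limiting.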

This post covers how to deploy Weaver onto Kubernetes and explains strategies to shard requests among different sample services.

Recently we launched our GO-CAR product to users in Singapore. During the analysis, we decided to go with the same cluster we use in Indonesia, but have different instances of the service as different shards.

This architectural topology was adopted for multiple reasons, which include:

- Achieving failure isolation across geographical boundaries.

- Scaling systems independently of one another.

- Having shard-specific configurations.

This is where Weaver plays an important role. We created another replica of the service with different configurations, and deployed Weaver in front of the service clusters. Weaver was then configured to shard the requests based on different strategies (in this instance, based on geographical location).

Sharding using Weaver

Weaver can shard based on multiple strategies like header lookup, key in body, hashring, and more. These are documented here.
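As an illustration of the hashring idea in general, the sketch below places backends on a consistent hash ring and routes each key to the next backend clockwise. It is a generic Python sketch, not Weaver's implementation; the HashRing class, the replicas parameter, and the backend names are all assumptions made for the example.

```python
import bisect
import hashlib


def _hash(value):
    """Stable 64-bit hash of a string."""
    return int.from_bytes(hashlib.sha256(value.encode("utf-8")).digest()[:8], "big")


class HashRing:
    def __init__(self, backends, replicas=100):
        # Each backend is placed on the ring at several virtual points
        # so that keys spread more evenly across backends.
        self._ring = sorted(
            (_hash(f"{backend}#{i}"), backend)
            for backend in backends
            for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    def backend_for(self, key):
        # Find the first ring point clockwise from the key's hash,
        # wrapping around to the start of the ring if needed.
        index = bisect.bisect_right(self._points, _hash(key)) % len(self._ring)
        return self._ring[index][1]
```

With a ring, adding a backend only moves the keys that now fall on the new backend's points; every other key keeps its previous backend.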

Weaver can be configured using a simple JSON file (referred to as Weaver ACLs).

Deploying Weaver to Kubernetes

You can use the Helm charts available on our GitHub to deploy Weaver. Let’s start by setting up minikube on the dev machine (you can follow the instructions on the official Kubernetes website).

Once you clone the repo, deploying to Kubernetes is pretty simple. All you have to do is start Kubernetes and deploy using helm. Once you have done the setup by following the links mentioned above, verify that minikube is installed.

Let’s start minikube, which will start a Kubernetes cluster on your dev machine. If your setup is fine, you will be greeted with the message “Everything looks great. Please enjoy minikube!”

You can see that our cluster is up and running and kubectl is configured correctly. We will be deploying using Helm (please follow the official Helm docs to install it). Once you have Helm installed on your dev machine, run the following commands to start working with it. Note that helm init applies to Helm 2; Helm 3 removed the command and needs no initialisation step.

# To begin working with Helm

helm init

minikube dashboard

You will be able to see the Kubernetes dashboard in your default browser.

Now, we are good to deploy Weaver to Kubernetes.

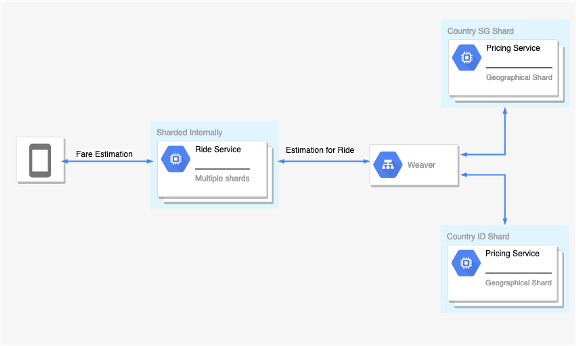

In this exercise, we will be releasing a simple internally-sharded service which gives us an estimate for a ride.

A consumer asks for an estimate for a ride in a particular country_code, based on which Weaver routes the request to the corresponding shard. To better understand the request routing path, refer to the image below.

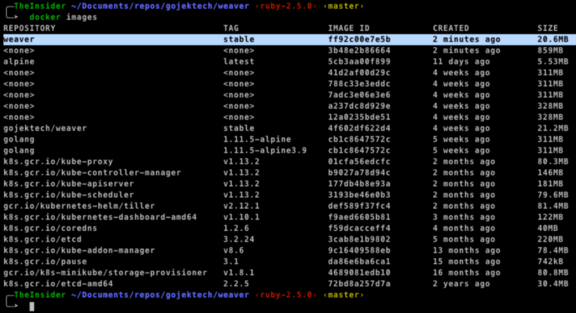

Before continuing further, let’s build a dev Docker image for Weaver so that this experiment runs against the latest master branch.

# Connect to minikube docker engine

eval $(minikube docker-env)

# Builds weaver docker image and tags it as stable

docker build . -t gojektech/weaver:stable # To have the latest build.

Please be aware that eval $(minikube docker-env) works only for that shell, as it is local to the session.

If the above commands are executed successfully, a Weaver image should be present in the minikube docker environment, as represented below:

By now we have everything set up. Time to deploy Weaver.

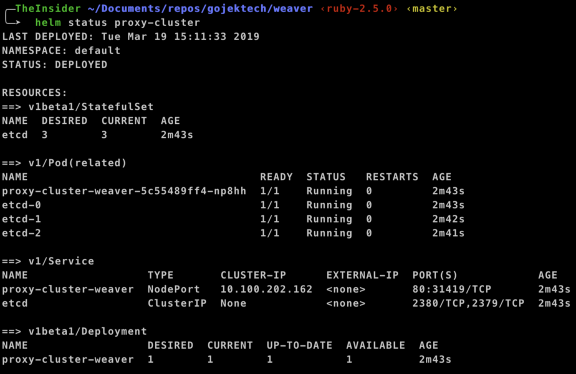

helm upgrade --debug --install proxy-cluster ./deployment/weaver --set service.type=NodePort -f ./deployment/weaver/values-env.yaml

Let’s break down the command and understand what we are doing. We are upgrading a release named proxy-cluster, and --install tells Helm to install the release if it is not already present. We are setting service.type to NodePort so that we can connect from the host machine. To get the status of the deployment, you can run:

helm status proxy-cluster

This will output something very similar to the image below. Please check that all your pods are in the Running status.

We have successfully deployed Weaver to Kubernetes, and it is actively watching /weaver/acls for any changes in ACLs. It’s time to deploy simple services and add an ACL to see Weaver in action.

Deploying A Simple Service

We have a simple service called estimator, which estimates the amount a given ride will cost. The estimate is just a random number between a min cap and a max cap.

This is a simplified version of price estimation: all the service does is estimate the amount and return a response containing the estimated amount and the currency, which is a shard-level config. We are going to build a Docker image for this simple service and deploy it as two shards: an Indonesian shard where the currency is configured as IDR, and a Singapore shard where the currency is configured as SGD.
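The behaviour described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the estimator's actual source (which lives in the examples directory of the repo); the estimate function and the amount and currency field names are assumptions.

```python
import random


def estimate(min_cap, max_cap, currency, rng=random):
    """Return a random amount between the caps, tagged with the shard's currency.

    The currency comes from shard-level configuration, not from the request,
    so a given shard always answers in its own currency.
    """
    amount = round(rng.uniform(min_cap, max_cap), 2)
    return {"amount": amount, "currency": currency}


# Hypothetical shard-level settings for the two deployments.
singapore_estimate = estimate(5, 50, "SGD")
indonesia_estimate = estimate(10000, 100000, "IDR")
```

Because the currency is fixed per shard, sending a request to the wrong shard yields an estimate in the wrong currency, which is exactly what the routing layer has to prevent.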

We will build this Docker image using the Dockerfile shown below and deploy it to two clusters. All the code and charts needed to deploy it are available under examples in the public GitHub repo.

$ eval $(minikube docker-env)

$ docker build ./examples/body_lookup -t estimator:stable

# Deploying to SG Cluster

helm upgrade --debug --install singapore-cluster ./examples/body_lookup/estimator -f ./examples/body_lookup/estimator/values-sg.yaml

# Deploy to ID Cluster

helm upgrade --debug --install indonesia-cluster ./examples/body_lookup/estimator -f ./examples/body_lookup/estimator/values-id.yaml

Below is the Dockerfile we used to build the Docker image. Once you have built the image using the above command, deploy it to singapore-cluster and indonesia-cluster. You can check the status of the deployments using helm status, as we did for Weaver.

Now we have an Estimate Service deployed to Kubernetes and exposed internally using ClusterIP. These services are sharded and have no knowledge of one another. Let’s deploy an ACL that tells Weaver to route a request to the Singapore cluster if the request body contains a currency field with the value SGD, and to the Indonesian cluster if the value is IDR. You can exec into either of the estimate service pods and make a curl request to see the response. Regardless of the country you send, the service returns its configured currency.

Even though our customer requests an estimate in a particular currency, each service returns only its configured currency. Weaver handles this using body_lookup. Below is the ACL that we apply to etcd so that Weaver loads it and shards requests accordingly.

{
  "id": "estimator",
  "criterion": "Method(`POST`) && Path(`/estimate`)",
  "endpoint": {
    "shard_expr": ".currency",
    "matcher": "body",
    "shard_func": "lookup",
    "shard_config": {
      "IDR": {
        "backend_name": "indonesia-cluster",
        "backend": "http://indonesia-cluster-estimator"
      },
      "SGD": {
        "backend_name": "singapore-cluster",
        "backend": "http://singapore-cluster-estimator"
      }
    }
  }
}

To put it in simple English: when someone makes a POST request and the path is /estimate, we tell Weaver to use the body matcher, evaluate the expression .currency against the request body, and look up the backend for that value in shard_config. To apply this to etcd, we have to port forward to connect to the etcd deployed in Kubernetes.

$ kubectl port-forward svc/etcd 2379

Forwarding from 127.0.0.1:2379 -> 2379

Forwarding from [::1]:2379 -> 2379

You should be able to reach etcd at localhost:2379. Make a curl request to check the health of etcd and ensure that the cluster is up and running. Once that is done, we can set the ACL on etcd and watch Weaver load it and start serving traffic without any restarts.

As you can see, Weaver picked up the ACL we applied and started serving traffic without a restart.
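To make the routing rule concrete, here is a rough Python sketch of what a body lookup does with the endpoint section of the estimator ACL from this post. The extract and pick_backend helpers are hypothetical, the expression handling covers only simple dotted paths such as .currency, and the criterion check (method and path) is left out; Weaver's real implementation may differ.

```python
import json

# The endpoint section of the estimator ACL from this post.
ENDPOINT = {
    "shard_expr": ".currency",
    "matcher": "body",
    "shard_func": "lookup",
    "shard_config": {
        "IDR": {
            "backend_name": "indonesia-cluster",
            "backend": "http://indonesia-cluster-estimator",
        },
        "SGD": {
            "backend_name": "singapore-cluster",
            "backend": "http://singapore-cluster-estimator",
        },
    },
}


def extract(body, shard_expr):
    """Resolve a simple dotted expression such as ".currency" against a JSON body.

    Returns None when the body is not valid JSON or the path is missing.
    """
    try:
        value = json.loads(body)
        for part in shard_expr.strip(".").split("."):
            value = value[part]
        return value
    except (ValueError, KeyError, TypeError, IndexError):
        return None


def pick_backend(endpoint, body):
    """Return the backend URL for a request body, or None if no shard matches."""
    key = extract(body, endpoint["shard_expr"])
    if not isinstance(key, str):
        return None
    shard = endpoint["shard_config"].get(key)
    return shard["backend"] if shard else None
```

For example, pick_backend(ENDPOINT, '{"currency": "SGD"}') resolves to the Singapore backend, while a body with an unconfigured currency resolves to None, leaving the caller to decide how to respond.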

Conclusion

Weaver was written to solve a particular use case, and has since become the general solution for sharding problems at GOJEK.

Reminder: Weaver is open source. Find it here: 👇

Hope you enjoyed this post and that it proved useful. Please do share your feedback and let us know what you think. 👌
