Service Networking in a Hybrid Infrastructure

The first in a series of posts on challenges faced while migrating services and workloads to containers

By Praveen Shukla

In the land of the Super App, we are working on migrating all our services and workloads to containers. We are primarily on GKE and GCE. While migrating, we faced some challenges running high-throughput services. These included service discovery between the existing VM-based infra and Kubernetes, managing multi-cluster deployments, feedback, observability around containers, and service reachability while adopting Kubernetes. This series of blog posts is an attempt to share our learnings, how we tackled such issues, and our solutions.

TL;DR: In this post I will talk about communication issues where applications running on VMs are not able to connect to the service IP of the application running on Kubernetes.

Problem Description

VMs can’t talk to containers directly: the containers sit on a Kubernetes overlay network, so the Kubernetes cluster’s IP range is different from the VMs’ IP range.

Solutions

Attempt 1:

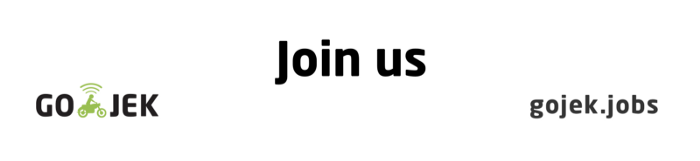

Applications with service type LoadBalancer using Google internal load balancer

To solve internal service connectivity from VMs, one option was to use Google’s Internal load balancer in front of every Kubernetes service since we are on Google Cloud.
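As a rough sketch of what this looks like per application, the snippet below writes a Service manifest of type LoadBalancer carrying GKE's internal load balancer annotation. The service name, selector label, ports, and file name are placeholders chosen for illustration, not values from our setup.

```shell
# Write a hypothetical per-application Service that asks GKE for an
# internal (not public) load balancer. All names and ports are placeholders.
cat > foobar-service-ilb.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: foobar-service
  annotations:
    # GKE annotation requesting an internal load balancer
    cloud.google.com/load-balancer-type: "Internal"
spec:
  type: LoadBalancer
  selector:
    app: foobar          # assumed pod label
  ports:
  - port: 80             # port exposed on the load balancer
    targetPort: 8080     # assumed container port
EOF
```

Every application exposed this way gets its own load balancer, which is what makes the per-project limit a problem.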

Cons: There is a limit of 50 internal load balancers per GCP project, and we have 500+ microservices. Not all of them are on containers yet, but this isn’t an ideal solution because one day we will hit this limit.

Attempt 2:

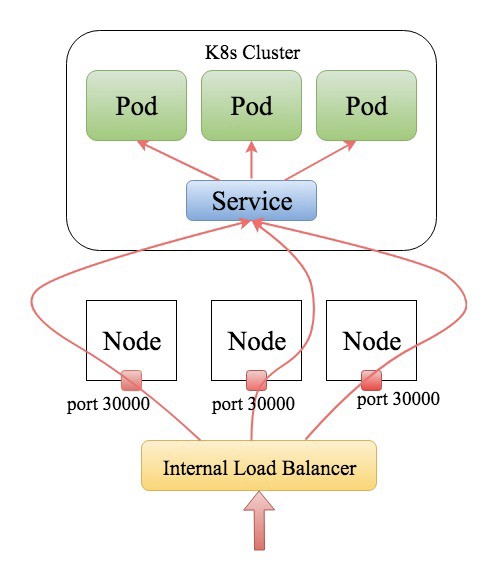

Add Routes on VMs

We added a route in the VPC network for the Kubernetes cluster IP address range, pointing it to one of the Kubernetes cluster nodes. The iptables rules set by kube-proxy on the cluster nodes take care of the rest.

But this solution has a limitation. Let’s say the Kubernetes cluster node that the route points to goes down. The route added for that specific host is no longer usable. Hence, we can’t consider this solution for production-grade systems, even if we have redundant routes with different precedence pointing to the remaining nodes.
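For illustration, routes like the ones described above could be created roughly as follows. This is a sketch under assumptions, not necessarily the exact commands used: the network name, zone, node names, route names, and service IP range are all placeholders, and the script only prints the gcloud commands unless DRY_RUN=0 is set. In GCP a lower priority value takes precedence, so the second route acts as the backup.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholder values: replace with your own network, range, and zone.
NETWORK="default"
SERVICE_RANGE="10.10.240.0/20"   # assumed Kubernetes service IP range
ZONE="asia-southeast1-a"         # assumed zone of the cluster nodes

# Dry run by default: print the command instead of executing it.
# Set DRY_RUN=0 to actually call gcloud.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# add_route NAME NODE PRIORITY
# Sends traffic for the service range to one cluster node.
add_route() {
  run gcloud compute routes create "$1" \
    --network="$NETWORK" \
    --destination-range="$SERVICE_RANGE" \
    --next-hop-instance="$2" \
    --next-hop-instance-zone="$ZONE" \
    --priority="$3"
}

add_route k8s-services-primary gke-node-1 100   # preferred route
add_route k8s-services-backup  gke-node-2 200   # used if the primary is unusable
```

Even with the backup route in place, every route still depends on one specific node staying up, which is the weakness described above.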

Attempt 3:

Host based routing

Then we tried using Kubernetes ingress with the Nginx controller, which does host-based routing. The Nginx controller deploys its own service, which uses a Google internal load balancer, and does host-based routing using Nginx in the backend. This gave us the capability to use only one Google internal load balancer for all the applications. So, let’s dive into how we actually used the Nginx controller for host-based routing.

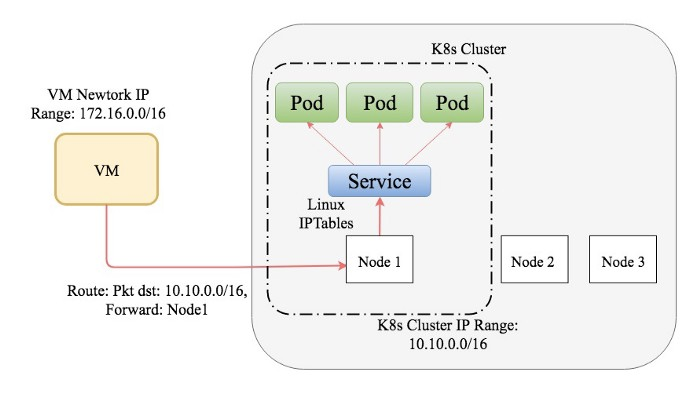

Ingress is a set of rules that allows inbound traffic to reach services in the cluster. Ingress looks something like the diagram below:

Before we dive into how to use ingress, it helps to know that Ingress is a beta resource and is not available in Kubernetes versions prior to 1.1. GKE (Google Kubernetes Engine) provides an ingress controller by default. We can have any number of ingress controllers in a cluster, as long as each one is selected with the proper annotation.

There are many open source ingress controllers available:

- Ingress GCE (default in GKE)

- Nginx Controller (maintained by Kubernetes)

- Contour Ingress controller

- Kong Ingress controller

- Traefik Ingress controller

- NginxInc’s Ingress controller

- Many more…

Minimal Ingress Resources look like this
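As a rough sketch of the shape, the snippet below writes a single-backend Ingress to a file. The names test-ingress and testsvc, port 80, and the file name are placeholders. The extensions/v1beta1 API version is assumed to match the beta-era resource described in this post; newer clusters serve Ingress from networking.k8s.io/v1, which uses a different backend schema.

```shell
# Write a minimal Ingress that sends all traffic to one default backend.
# Names and port are placeholders; the API version is an assumption (see above).
cat > minimal-ingress.yaml <<'EOF'
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: test-ingress
spec:
  backend:
    serviceName: testsvc
    servicePort: 80
EOF
```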

How to setup an internal ingress

In order to set up an internal ingress, we need to understand the ingress controller. We are using the Nginx ingress controller. It does name-based virtual host routing using Nginx.

Ingress can be both internal and external. For external-facing services we use an external ingress. For internal communication we use an internal ingress with name-based virtual hosting.

We use Helm to manage ingress objects

Helm is a package manager for Kubernetes. Helm is equivalent to apt or yum in the OS packaging world.

Let’s install Nginx controller using helm

1. Install the Helm client. Refer to the installation guide.

2. Make sure you are connected to your Kubernetes cluster.

kubectl config current-context

foobar-cluster

3. Run the helm init command to install the Tiller server. Tiller is the server component of Helm which runs inside the Kubernetes cluster and manages releases/installation of charts.

4. Since we are going to be using the official Helm chart repository for installing the ingress controller, we have to add a public Helm repo.

helm repo add stable https://kubernetes-charts.storage.googleapis.com/

5. Use the helm install command to install the Nginx controller with annotation overrides to Internal.

helm install --name nginx stable/nginx-ingress -f values.yml

NAME: nginx

LAST DEPLOYED: Thu Jul 26 22:30:34 2018

NAMESPACE: default

STATUS: DEPLOYED

...

🎉 Ingress controller is installed.

Note: We set load-balancer-type to Internal because we wanted to use Google’s internal load balancer.
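The contents of values.yml are not shown above. A plausible minimal version, assuming the controller.service.annotations key of the stable/nginx-ingress chart and GKE's load-balancer-type annotation, could be written like this:

```shell
# Write a minimal values.yml override for the stable/nginx-ingress chart.
# Assumption: the only override needed is the service annotation that makes
# the controller's LoadBalancer service internal on GKE.
cat > values.yml <<'EOF'
controller:
  service:
    annotations:
      cloud.google.com/load-balancer-type: "Internal"
EOF
```

A real values file may carry more overrides (replica counts, resource limits, and so on); this sketch covers only the internal load balancer setting.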

Let’s verify that Nginx controller is installed in the cluster.

⇒ kubectl get svc | grep nginx

nginx-nginx-ingress-controller LoadBalancer 10.10.247.234 172.16.0.4 80:30149/TCP,443:30744/TCP 7m

nginx-nginx-ingress-default-backend ClusterIP 10.10.245.46

Nginx controller launches two different services: nginx-nginx-ingress-controller is the nginx service which does domain-based routing, and nginx-nginx-ingress-default-backend is the default nginx backend.

The type of the Nginx controller’s service is LoadBalancer, which, with the Internal annotation, translates to a Google internal load balancer on GCP, and the service/pods are responsible for domain-based routing.

Although we are using the LoadBalancer type for the service, the nginx services are still exposed using NodePort so the load balancer can reach services inside the cluster.

Next, we have to create an ingress resource which has foobar.mydomain.com as the domain name and foobar-service as its backend. Once this resource is created with the nginx annotations, the ingress controller watches the object and fetches the service endpoints to generate the nginx config.
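A sketch of such an ingress resource is below. The host and backend service name come from the text; the resource name, file name, path, and port 80 are assumptions, as is the extensions/v1beta1 API version (newer clusters use networking.k8s.io/v1 with a different backend schema). The kubernetes.io/ingress.class annotation is what hands the object to the Nginx controller rather than the GKE default.

```shell
# Write a host-based Ingress for foobar.mydomain.com.
# Resource name, path, and port are placeholders.
cat > foobar-ingress.yaml <<'EOF'
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: foobar-ingress
  annotations:
    # Select the Nginx ingress controller instead of the GKE default
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: foobar.mydomain.com
    http:
      paths:
      - path: /
        backend:
          serviceName: foobar-service
          servicePort: 80
EOF
```

After applying the file with kubectl apply -f foobar-ingress.yaml, a VM can check the path by sending a request to the load balancer IP (172.16.0.4 in the output above) with the Host header set to foobar.mydomain.com.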

Similarly, for other applications we can update this object with their domain name and service, and the Nginx config gets updated based on the ingress resource. So in this attempt we used only one Google internal load balancer, and that’s how VMs are able to connect to applications running on Kubernetes.
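To make the "one load balancer, many applications" idea concrete, here is a small sketch that generates one Ingress object with a host rule per application. The ingress_rule helper, the second application (barbaz), the resource name, and the ports are all hypothetical, and the extensions/v1beta1 schema is assumed as before.

```shell
# ingress_rule HOST SERVICE PORT
# Print one host rule in the (assumed) extensions/v1beta1 schema.
ingress_rule() {
  cat <<EOF
  - host: $1
    http:
      paths:
      - path: /
        backend:
          serviceName: $2
          servicePort: $3
EOF
}

# Assemble a single Ingress that routes several hostnames through
# the same Nginx controller, and therefore the same load balancer.
{
  cat <<'EOF'
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: internal-apps
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
EOF
  ingress_rule foobar.mydomain.com foobar-service 80
  ingress_rule barbaz.mydomain.com barbaz-service 80   # hypothetical second app
} > internal-apps-ingress.yaml
```

Adding another application is then one more ingress_rule line, with no new load balancer involved.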

Stay tuned for my next blog post, where I will be discussing multi-cluster service discovery in Kubernetes.


References: https://kubernetes.io/docs/concepts/services-networking/ingress/