Lessons from Doing a Zero Downtime Migration

By Baskara Patria

In early 2017, GO-FOOD had to migrate all requests going to an old service over to a new one. The challenge was to do this without any downtime that would affect our merchants or the customers ordering food. Both services are Ruby on Rails apps built on top of PostgreSQL. Let’s call the old one “Service A” and the new one “Service B”.

To give you an idea of how this works, some basics: a customer orders food from their favourite restaurant, and our driver picks it up and delivers it. Easy? Not really. Some merchants don’t update the prices of their food, some customers want more or less food, and so on. In short, there are a dozen things that could go wrong and create a disparity between the final price the customer sees on their phone and what the driver has to pay.

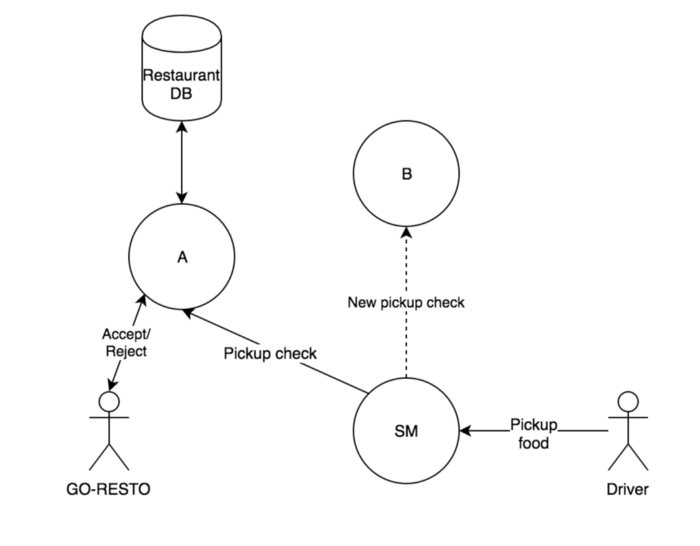

The task was to check whether a GO-FOOD order coming from an Order Management Service (SM) should be sent to GO-RESTO, our merchant-facing mobile app. When it receives the order, GO-RESTO shows the total price of the food, and the merchant can decide whether to accept it or reject it (if, say, the price is incorrect).

Why we migrated

Service A was built in less than one month because we wanted a Minimum Viable Product to see whether GO-RESTO helps merchants manage their GO-FOOD orders. We took the liberty of using some stop-gap measures to ship it as soon as possible.

Service A did everything: authentication, authorization, maintaining orders, and moving money between driver, customer and merchant using our digital wallet, GO-PAY. Once the idea of GO-RESTO was validated, we wanted a sturdier, final product build. We wanted to move order maintenance and money movement into the right service, the actual source of truth: Service B.

The requirements

The two main technical requirements we needed to cater for were zero downtime and quick rollback. The switch had to happen without affecting our customers, our drivers, or the merchants using the GO-RESTO mobile app. At this point, Service B had not been used in production at all. The other challenge was to ensure that traffic could switch back to Service A immediately should something go wrong. Because any order written to Service B after the switch would also have to exist in Service A for a rollback to work, we needed a mechanism for two-way data replication.

What we did

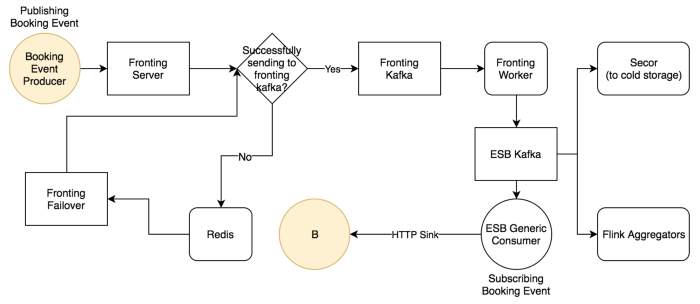

At GO-JEK, every order state change (searching for a driver, order placed, picking up food, etc.) results in an event being published to Kafka. The diagram above shows the pipeline our Data Engineering Team built and gives the big picture of how an event gets published and reaches Service B. We made Service B listen to a Kafka topic (through what we call the ESB Generic Consumer) that delivers these events. Whenever an order is updated, Service B persists it in its own storage. At this point, Service B had a replica of every order. Next, SM needed to know whether to call Service A or Service B.
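The replication step can be sketched as an idempotent event handler. This is a minimal illustration, not Service B's actual code: the event fields (`order_id`, `status`, `version`) are hypothetical, a dict stands in for Service B's PostgreSQL tables, and a plain iterable stands in for the Kafka topic. The assumed per-order `version` lets the handler ignore redelivered or stale events.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass
class OrderEvent:
    # Hypothetical payload; the real event schema is not described in the post.
    order_id: str
    status: str      # e.g. "searching_driver", "order_placed", "picking_up_food"
    version: int     # assumed to increase with every state change of an order


class OrderReplica:
    """Stand-in for Service B's own order storage (a dict instead of PostgreSQL)."""

    def __init__(self) -> None:
        self._orders: Dict[str, OrderEvent] = {}

    def apply(self, event: OrderEvent) -> bool:
        """Upsert the order from an event; return False if the event is stale.

        With at-least-once delivery an event may arrive twice, so replaying
        an event (or an older one) leaves the stored order unchanged.
        """
        current = self._orders.get(event.order_id)
        if current is not None and current.version >= event.version:
            return False
        self._orders[event.order_id] = event
        return True

    def get(self, order_id: str) -> Optional[OrderEvent]:
        return self._orders.get(order_id)


def consume(replica: OrderReplica, events: Iterable[OrderEvent]) -> None:
    # In production this loop would be fed by a Kafka consumer
    # (the ESB Generic Consumer in the post); here any iterable will do.
    for event in events:
        replica.apply(event)


replica = OrderReplica()
consume(replica, [
    OrderEvent("o1", "searching_driver", 1),
    OrderEvent("o1", "order_placed", 2),
    OrderEvent("o1", "searching_driver", 1),  # redelivered, ignored
])
print(replica.get("o1").status)  # order_placed
```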

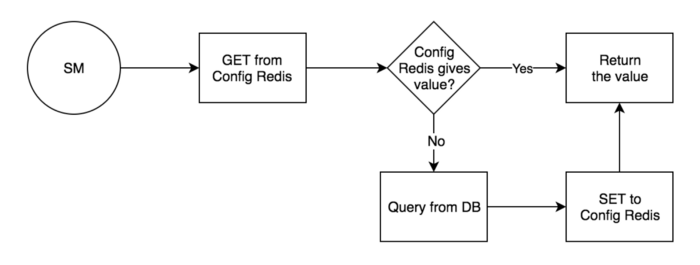

We avoided storing this config in an environment variable file, because changing it would have required restarting the service on each box. Luckily, SM has a good config management system that lets us change a value with an API call, and the change takes effect immediately. These configs are stored in both the database and Redis. Whenever the API is called, it updates the value in the database and invalidates the cached copy in Redis. So, from SM’s point of view, it was already possible to switch between calling Service A and Service B to determine whether an order should be picked. This was a major advantage and brought us closer to zero downtime.
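The config mechanism described above (write to the database, invalidate Redis, read through the cache) can be sketched as follows. SM’s real config API is not shown in the post, so `ConfigStore`, `pick_service` and the key `order_check_service` are hypothetical, and plain dicts stand in for PostgreSQL and Redis.

```python
from typing import Dict, Optional


class ConfigStore:
    """Sketch of a database-backed config with a shared Redis-style cache."""

    def __init__(self) -> None:
        self.db: Dict[str, str] = {}      # source of truth (the database)
        self.cache: Dict[str, str] = {}   # shared cache (Redis)
        self.db_reads = 0                 # counts database hits, for illustration

    def set(self, key: str, value: str) -> None:
        # What the config API does: update the database, then invalidate the cache.
        self.db[key] = value
        self.cache.pop(key, None)

    def get(self, key: str, default: Optional[str] = None) -> Optional[str]:
        # Read-through: serve from the cache, fall back to the database on a miss.
        if key in self.cache:
            return self.cache[key]
        self.db_reads += 1
        value = self.db.get(key, default)
        if value is not None:
            self.cache[key] = value
        return value


def pick_service(config: ConfigStore) -> str:
    # Every SM box reads the same shared value, so one API call flips the
    # whole fleet without restarting any process. Key name is hypothetical.
    return config.get("order_check_service", "service_a")


config = ConfigStore()
print(pick_service(config))   # service_a (read from the database)
print(pick_service(config))   # service_a (served from the cache)
config.set("order_check_service", "service_b")
print(pick_service(config))   # service_b (cache was invalidated)
```

Invalidating rather than overwriting the cached value keeps the database as the single source of truth; the next read repopulates the cache.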

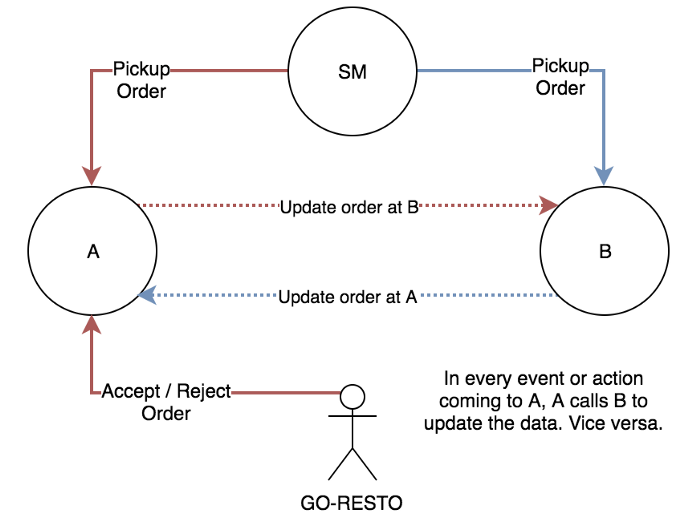

Service A and Service B each have their own database, and we needed to keep them in sync without spending too much time building what was only a temporary solution. It was temporary because, once Service B proved to work well without issues, Service A would no longer need to store orders. We then created sync APIs on both Service A and Service B: whenever SM or the GO-RESTO mobile app called one service, that service called the other to replicate the change. This way, Service A and Service B held the same data, and we were in good shape up to this point.
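The two-way sync can be sketched with two in-process objects standing in for the services. The post does not say how the services avoided calling each other forever; this sketch assumes a separate sync endpoint that writes locally without calling back. Method names are hypothetical, and a direct method call replaces the HTTP request between services.

```python
from typing import Dict, Optional


class Service:
    """In-process stand-in for Service A or Service B and its own database."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.orders: Dict[str, str] = {}      # order_id -> status
        self.peer: Optional["Service"] = None

    def update_order(self, order_id: str, status: str) -> None:
        """Public endpoint called by SM or the GO-RESTO app."""
        self.orders[order_id] = status
        if self.peer is not None:
            # Replicate to the other service (an HTTP call in a real system).
            self.peer.sync_order(order_id, status)

    def sync_order(self, order_id: str, status: str) -> None:
        """Sync endpoint: write locally but do NOT call back, avoiding a loop."""
        self.orders[order_id] = status


a, b = Service("A"), Service("B")
a.peer, b.peer = b, a
a.update_order("o1", "accepted")
b.update_order("o2", "rejected")
print(a.orders == b.orders)  # True
```

This sketch ignores failures: if the peer is down, the write is lost on one side. A real setup would need retries or a reconciliation job, which is one reason to keep such code temporary.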

At this point, both services had the same order data, and the caller (SM) could easily switch which one to call. We then needed to add toggles to every public API in Service A that served requests from its own storage, so that those requests could be served by Service B instead. Every call from the mobile app still went through Service A, but internally Service A called Service B. Service A thus became a proxy that handled authentication and authorisation.
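A toggled proxy endpoint in Service A might look roughly like this. It is a sketch under stated assumptions: the token check stands in for Service A’s real authentication and authorisation, the lookup callables stand in for A’s own storage and an HTTP client for Service B, and all names are hypothetical.

```python
from typing import Callable, Dict, Optional, Set

Lookup = Callable[[str], Optional[str]]


def make_get_order(
    local_lookup: Lookup,
    service_b_lookup: Lookup,
    toggle_on: Callable[[], bool],
    valid_tokens: Set[str],
) -> Callable[[str, str], Dict[str, object]]:
    """Build a Service A endpoint that authenticates, then serves from A or B."""

    def get_order(token: str, order_id: str) -> Dict[str, object]:
        # Authentication and authorisation stay in Service A.
        if token not in valid_tokens:
            return {"status": 401}
        # The toggle decides whether data comes from A's own storage or from B.
        lookup = service_b_lookup if toggle_on() else local_lookup
        order = lookup(order_id)
        if order is None:
            return {"status": 404}
        return {"status": 200, "order": order}

    return get_order


flags = {"use_b": False}
a_db = {"o1": "from A"}
b_db = {"o1": "from B"}
get_order = make_get_order(a_db.get, b_db.get, lambda: flags["use_b"], {"t1"})
print(get_order("t1", "o1"))  # {'status': 200, 'order': 'from A'}
flags["use_b"] = True
print(get_order("t1", "o1"))  # {'status': 200, 'order': 'from B'}
```

Because the toggle is evaluated on every request, flipping it back is the rollback path: no deploy is needed.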

We switched all traffic at the time of lowest average throughput (around midnight Jakarta time). Everything went smoothly, and it turned out we did not need to roll back. Service B is not a heavy-duty service at GO-JEK: it currently serves around 15,000 rpm on average and around 30,000 rpm at peak. We removed the syncing code from Service A and Service B a few days after the migration.

Some learnings:

- Avoid environment-variable toggles for a full-switch migration, because they are tied to a single box. In our case, we persisted the config value in central storage (the database and Redis), so a change is reflected on every application box. Every update to the database also invalidates the cached value in Redis. The app reads the value from Redis, falling back to the database only after an invalidation, so reads are fast and keep extra load off the database.

- Always prepare a rollback plan for a migration, even if you think the worst won’t happen. This applies not only to the migration I worked on, but to anything that touches the production environment. Migrating live traffic is only possible if the old service and the new one hold the same records. This can be done by reading from and writing to the same data source (database), or by replicating data between the two services.
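The “same records” condition in the last point can be checked mechanically before switching traffic or rolling back. The post does not describe a verification step, so this is a hypothetical illustration: each service’s orders are assumed to be exported as an `order_id -> status` mapping, and the function names are invented for the sketch.

```python
from typing import Dict, List, Tuple


def diff_records(
    old: Dict[str, str], new: Dict[str, str]
) -> Tuple[List[str], List[str], List[str]]:
    """Compare order records held by two services.

    Returns (missing_in_new, missing_in_old, mismatched) as sorted order IDs.
    """
    missing_in_new = sorted(old.keys() - new.keys())
    missing_in_old = sorted(new.keys() - old.keys())
    mismatched = sorted(k for k in old.keys() & new.keys() if old[k] != new[k])
    return missing_in_new, missing_in_old, mismatched


def safe_to_switch(old: Dict[str, str], new: Dict[str, str]) -> bool:
    # Switching (or rolling back) is only safe when both sides hold the same records.
    return all(not ids for ids in diff_records(old, new))


print(diff_records({"o1": "a", "o2": "b"}, {"o1": "a", "o2": "c", "o3": "d"}))
# ([], ['o3'], ['o2'])
```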

Did this help in any way? Any suggestions or input? I’d love to hear your thoughts. And before I forget, we’re hiring. Come join us and help build beautiful products at enormous scale. Visit gojek.io for more.