How To Set Up A GKE Private K8S Cluster - Part 1

Setting up a GKE private K8s cluster and exposing services through NEG & IAP.

By Rohit Sehgal

If you’ve ever set up a GKE private cluster, you’d agree it’s extremely tricky, and you’ll run into a barrage of questions.

Why do we even want a private cluster?

What is the issue with a public cluster?

To access a GKE-managed cluster, you need client credentials anyway. So is a public GKE cluster really insecure?

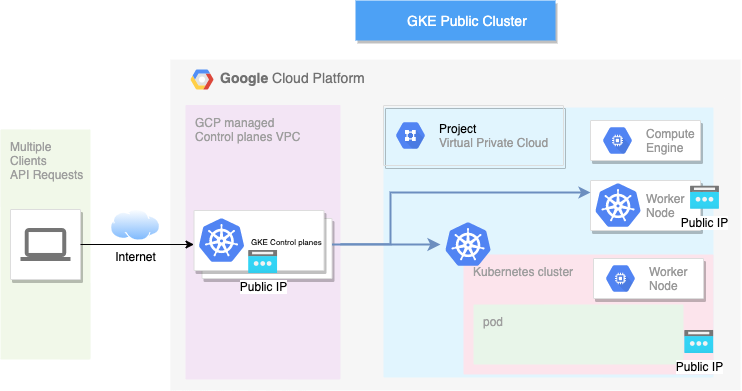

Here’s what happens: when you spin up a public cluster, GCP spins up the control plane and the worker nodes, and each of them gets a public IP address. Yes, each one of them. 🤷‍♂️

The control plane is protected by credentials, but the worker nodes are not; only the project’s default network settings apply to them.

This creates several security issues:

- If you have not configured firewall rules on the VPC, the nodes may be exposed to the public internet.

- A Service of type NodePort binds the service running in a pod to a port on every node, and since the nodes have public IP addresses, your service may be exposed to the internet.

- Access to the cluster cannot be restricted to employees only. Anyone with cluster credentials can access it if you have not configured RBAC on the cluster.

- There’s a possibility of insider threat. If an employee leaves the organisation, the network offers no way to stop them from reaching the public cluster with any credentials they may have taken.

- If a zero-day in GKE or K8s authentication or authorisation surfaces, it can be exploited from anywhere, because the control plane’s IP address is public.

As a general guideline, avoid running a cluster with public settings. GKE offers several configurations, from fully public to fully private:

- GKE public cluster: the control plane and every worker node have public IP addresses.

- GKE private cluster with public access to the control plane: worker nodes do not get public IP addresses, while the control plane gets a public IP address with no restrictions, meaning anyone with credentials can reach the control plane API.

- GKE private cluster with the control plane exposed to the internet, but only authorised networks can access the K8s API: the control plane has a public IP address that only specific public networks can reach; worker nodes are still on the private network.

- GKE completely private cluster: the control plane is not accessible from outside the VPC at all, and worker nodes are again on the private network.
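To make the four options concrete, here is a minimal Python sketch that maps each of them to the `gcloud container clusters create` flags I understand to control it. The mode names, the `203.0.113.0/24` office range and the `10.128.0.2` jumpbox address are hypothetical placeholders, and flag requirements change between gcloud versions, so check `gcloud container clusters create --help` before relying on it.

```python
# Sketch: the four GKE cluster configurations expressed as
# `gcloud container clusters create` flags. All addresses are hypothetical placeholders.
CONTROL_PLANE_CIDR = "172.17.0.0/28"  # private /28 reserved for the control plane
OFFICE_NETWORK = "203.0.113.0/24"     # hypothetical public network allowed to reach the API
JUMPBOX_CIDR = "10.128.0.2/32"        # hypothetical private IP of a jumpbox inside the VPC

# Worker nodes get internal IPs only; private clusters must be VPC-native (alias IPs).
PRIVATE_NODES = [
    "--enable-private-nodes",
    "--enable-ip-alias",
    f"--master-ipv4-cidr={CONTROL_PLANE_CIDR}",
]

CLUSTER_MODES = {
    # Control plane and nodes both have public IPs.
    "public": [],
    # Private nodes; control plane is public with no network restriction.
    "private_nodes_open_control_plane": PRIVATE_NODES,
    # Private nodes; public control plane reachable only from authorised networks.
    "private_nodes_authorised_networks": PRIVATE_NODES + [
        "--enable-master-authorized-networks",
        f"--master-authorized-networks={OFFICE_NETWORK}",
    ],
    # No public control plane endpoint; only authorised sources inside the VPC.
    "fully_private": PRIVATE_NODES + [
        "--enable-private-endpoint",
        "--enable-master-authorized-networks",
        f"--master-authorized-networks={JUMPBOX_CIDR}",
    ],
}


def create_command(name: str, mode: str) -> list[str]:
    """Return the argv that would create cluster `name` in the given mode."""
    return ["gcloud", "container", "clusters", "create", name, *CLUSTER_MODES[mode]]
```

For example, `" ".join(create_command("private-demo", "fully_private"))` gives a command line you can review before running it yourself. Building the argv as a list rather than a string also makes it safe to hand to `subprocess.run` later.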

In this blog, I’ll detail how to set up a private cluster and a jump host to access it.

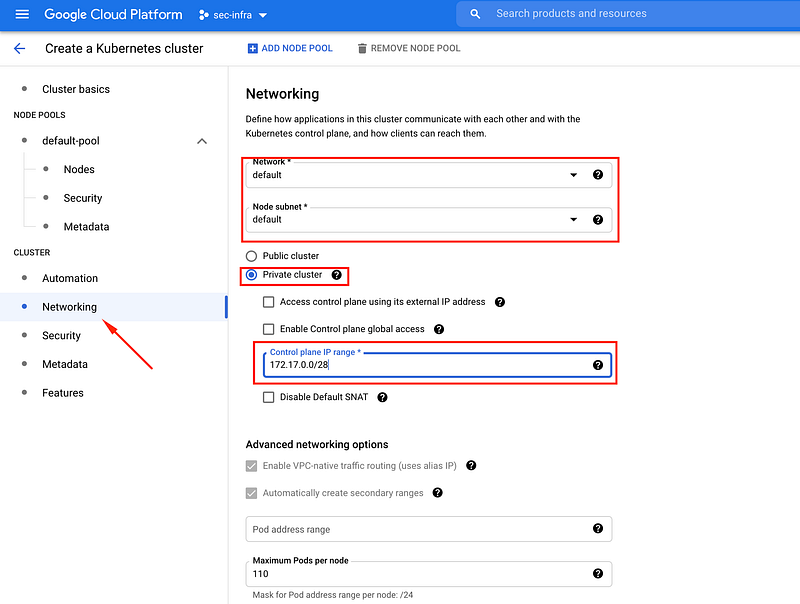

1. Let’s create a private cluster with the GKE console. Select **Standard Configuration -> Network**, then:

   - Select the network and subnetwork you want the worker nodes to be in. Since I have a `default` network, I am picking that name.

   - Select **Private Cluster** and make sure you untick **Enable Control Plane Global Access**; with it unticked, the control plane’s private endpoint is reachable only from clients in the cluster’s region. To keep the control plane unreachable from outside the VPC altogether, its public endpoint must also be disabled (the gcloud equivalent is `--enable-private-endpoint`).

   - Give the control plane an IP address range. Make sure it is a private range; a `/28` mask is required. I have used `172.17.0.0/28`.
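A wrong control plane range is an easy mistake to make here, so a small pre-flight check can help. This sketch uses Python’s standard `ipaddress` module to confirm that the range is a private `/28` and does not overlap the node subnet. The subnet `10.128.0.0/20` is an assumption for illustration; GKE also expects the range not to collide with other ranges in the VPC, which this sketch does not check.

```python
import ipaddress


def check_control_plane_cidr(cidr: str, node_subnet: str) -> list[str]:
    """Return problems with `cidr` as a GKE control plane range (empty list if none)."""
    problems = []
    net = ipaddress.ip_network(cidr)  # raises ValueError if host bits are set
    if net.prefixlen != 28:
        problems.append(f"{cidr} must be a /28, got /{net.prefixlen}")
    if not net.is_private:
        problems.append(f"{cidr} is not a private range")
    if net.overlaps(ipaddress.ip_network(node_subnet)):
        problems.append(f"{cidr} overlaps the node subnet {node_subnet}")
    return problems


# 10.128.0.0/20 is an assumed node subnet, used only for illustration.
print(check_control_plane_cidr("172.17.0.0/28", "10.128.0.0/20"))  # []
```

Returning a list of problems instead of raising on the first one lets you see every issue with a proposed range at once.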

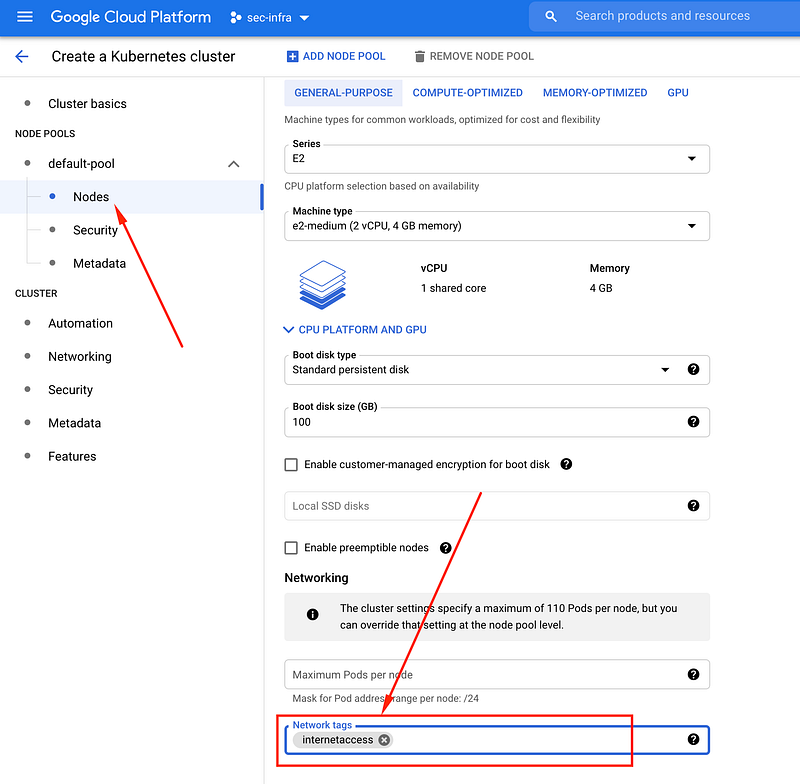

2. The next step is to set up the network configuration for the worker nodes. I usually give all the nodes internet egress access by default. Why? At times I need to pull Docker images from Docker Hub, and that requires egress access.

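The best approach is to use network tags: create a firewall rule that allows egress traffic to the internet and targets a tag, so that any VM carrying that tag gets the rule. Then click **default-pool -> Nodes** and apply the tag there.

The tag will be applied to all the nodes in this node pool. Keep in mind that the firewall rule only permits the traffic: private nodes have no public IP address, so they also need a route to the internet, typically Cloud NAT in the cluster’s region.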
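The tag mechanism is simple to reason about: a VPC firewall rule with target tags applies only to instances carrying at least one of those tags, while a rule with no target tags applies to every instance in the network. The sketch below models just that matching logic (it ignores service-account targets and rule priority); the tag names are hypothetical. A matching rule could be created with something like `gcloud compute firewall-rules create allow-internet-egress --network=default --direction=EGRESS --action=ALLOW --rules=all --destination-ranges=0.0.0.0/0 --target-tags=allow-internet-egress`.

```python
from dataclasses import dataclass, field


@dataclass
class FirewallRule:
    name: str
    # An empty set means the rule targets every instance in the VPC network.
    target_tags: set[str] = field(default_factory=set)


@dataclass
class Instance:
    name: str
    network_tags: set[str] = field(default_factory=set)


def rules_for(instance: Instance, rules: list[FirewallRule]) -> list[str]:
    """Names of the rules whose targets include `instance` (tag targeting only)."""
    return [
        rule.name
        for rule in rules
        if not rule.target_tags or rule.target_tags & instance.network_tags
    ]


rules = [
    FirewallRule("allow-internet-egress", {"allow-internet-egress"}),  # hypothetical tag
    FirewallRule("allow-ssh-to-jumpbox", {"jumpbox"}),                 # hypothetical tag
]
```

Because every node in the pool carries the tag, every node picks up the egress rule, while an untagged VM in the same network does not.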

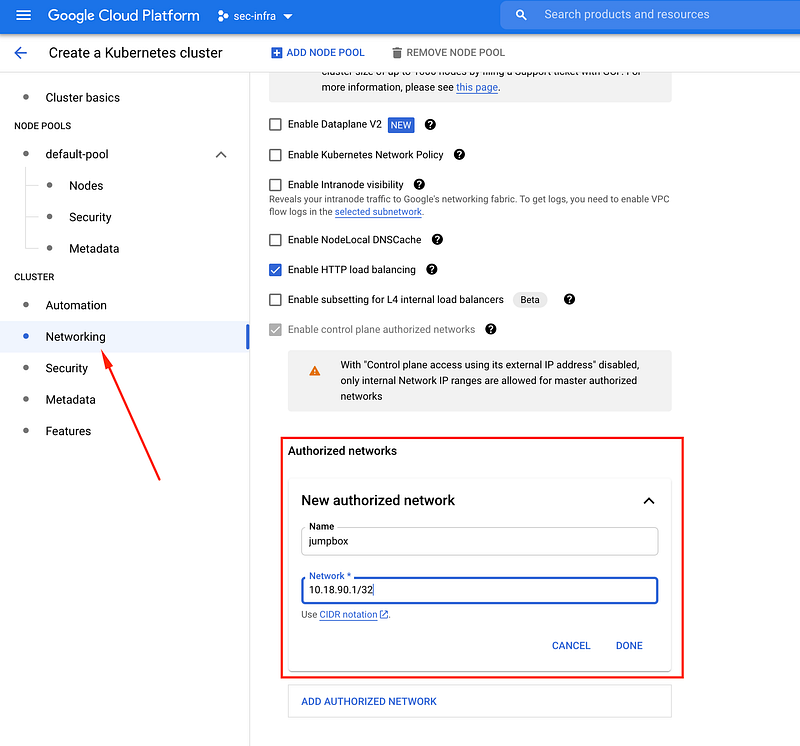

3. The third step is to create a jumpbox and add its private IP address to the cluster’s authorised networks, so that the cluster accepts API calls from it. The jumpbox is a Compute Engine (CE) instance running a proxy service. Its IP address need not be public; it is recommended to use a private instance and call the K8s API from its private IP address. I would encourage entering the exact IP address instead of an IP range in Authorised Networks.

- I already have a private CE VM running, so I will use it, but I need to install a simple proxy on it to route all my requests. Any HTTP/HTTPS proxy should work; I used tinyproxy (https://tinyproxy.github.io/).
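Authorised networks are plain CIDR blocks, so the difference between an exact address and a range is easy to see with Python’s `ipaddress` module. In this sketch `10.128.0.2` stands in for the jumpbox’s private IP and `10.128.0.0/20` for its whole subnet; both are hypothetical. With a `/32` only the jumpbox passes, whereas with the subnet range any VM in it could reach the API. On an existing cluster, the list can be set with `gcloud container clusters update CLUSTER_NAME --enable-master-authorized-networks --master-authorized-networks=10.128.0.2/32`.

```python
import ipaddress


def is_authorised(source_ip: str, authorised_networks: list[str]) -> bool:
    """True if `source_ip` falls inside any of the authorised CIDR blocks."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in authorised_networks)


JUMPBOX_ONLY = ["10.128.0.2/32"]  # hypothetical jumpbox private IP as an exact /32
WHOLE_SUBNET = ["10.128.0.0/20"]  # hypothetical subnet range, far broader

for source in ("10.128.0.2", "10.128.0.3"):
    print(source, is_authorised(source, JUMPBOX_ONLY), is_authorised(source, WHOLE_SUBNET))
# 10.128.0.2 True True
# 10.128.0.3 False True
```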

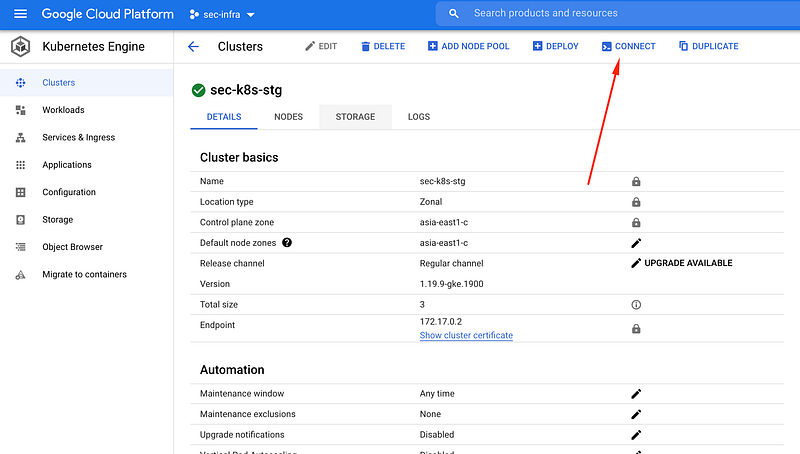
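For reference, a minimal tinyproxy configuration for this setup might look like the one rendered below. Because the SSH tunnel delivers requests to `localhost:8888` on the jumpbox, the proxy only needs to listen on and accept the loopback address, and since kubectl tunnels HTTPS through `CONNECT`, it can be limited to port 443. The directive names (`Port`, `Listen`, `Allow`, `Timeout`, `ConnectPort`) come from tinyproxy’s configuration format, but treat the values as assumptions and keep any other settings your distribution’s default `tinyproxy.conf` needs (for example `User` and `Group`).

```python
def render_tinyproxy_conf(port: int = 8888, connect_ports: tuple[int, ...] = (443,)) -> str:
    """Render a minimal tinyproxy.conf that serves only clients on the jumpbox itself."""
    lines = [
        f"Port {port}",      # must match the remote side of `ssh -L 8888:localhost:8888`
        "Listen 127.0.0.1",  # bind to loopback only; the SSH tunnel arrives here
        "Allow 127.0.0.1",   # reject any client other than the local tunnel endpoint
        "Timeout 600",       # seconds of inactivity before tinyproxy closes a connection
    ]
    # kubectl sends HTTPS through CONNECT; restrict which destination ports it may open.
    lines += [f"ConnectPort {p}" for p in connect_ports]
    return "\n".join(lines) + "\n"


print(render_tinyproxy_conf(), end="")
```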

4. Spin up the cluster and get the credentials: click **Connect** and run the command that pops up.

Running this command writes the cluster credentials to the kubeconfig on the machine where you run it. The control plane, however, only accepts requests from the jumpbox, so your requests have to go through it. You can achieve this by opening an SSH tunnel to the jumpbox over VPN and calling the K8s API with the proxy set in the environment. Because kubectl reaches the API server over HTTPS, the variable to set is `HTTPS_PROXY`: `HTTPS_PROXY=localhost:8888 kubectl get ns`

Note: I have the tunnel set up from my local system to the jumpbox like this: `ssh -L 8888:localhost:8888 -v user@jumpbox`

I alias `k` to `HTTPS_PROXY=localhost:8888 kubectl`.
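If you script against the cluster, the same proxy setting can be applied from code. This Python sketch mirrors the `k` alias by running kubectl with `HTTPS_PROXY`, the variable kubectl consults for its HTTPS connection to the API server, pointing at the local end of the SSH tunnel. It assumes kubectl is on your `PATH` and the tunnel is already open on port 8888; the function names are hypothetical.

```python
import os
import subprocess
from typing import Mapping, Optional

# Local end of `ssh -L 8888:localhost:8888 user@jumpbox`.
TUNNEL_PROXY = "localhost:8888"


def proxied_env(proxy: str = TUNNEL_PROXY, base: Optional[Mapping[str, str]] = None) -> dict[str, str]:
    """Copy the environment and route kubectl's HTTPS traffic through the tunnel."""
    env = dict(os.environ if base is None else base)
    env["HTTPS_PROXY"] = proxy  # kubectl talks to the API server over HTTPS
    return env


def k(*args: str) -> str:
    """Run kubectl through the jumpbox proxy, like the `k` alias, and return stdout."""
    result = subprocess.run(
        ["kubectl", *args],
        env=proxied_env(),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if kubectl exits non-zero
    )
    return result.stdout
```

With the tunnel open, `print(k("get", "ns"))` should behave like `k get ns` in the shell. Setting the variable per call, rather than exporting it globally, keeps other tools on your machine from being routed through the jumpbox.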

In the next part of the blog, we will see how to securely expose a service running in the private cluster. Watch this space!
