Hacks And Tips To Deploy CTFd In K8s

How our Product Security team deployed CTFd on K8s with GKE, behind IAP, for our internal hackathon.

By Rohit Sehgal

Our Product Security team recently hosted GoSecCon, a security conference that was a treasure trove of information through internal and external talks. One of the most exciting parts of the conference was GoTroopsGoHack, a two-day CTF (Capture The Flag) hacking challenge.

Hosting GoTroopsGoHack was a challenging task in itself. Here are a few constraints:

- An obvious requirement: The CTF hosting environment should be foolproof and secure

- It should be exposed to the public internet but accessible only to Gojek domain users

- If something goes down/wrong, re-deployment should be quick and effortless

- If a specific CTF challenge sees noticeable load, it should be possible to scale it to handle more

- The infrastructure of the CTF portal and CTF challenges should be portable

For the CTF portal, we wanted one that was battle-tested, lightweight, easy to deploy, and manageable, so we went with CTFd.

To expose the portal to the public internet while keeping it accessible only to Gojek domain users, the only option was to go with Google's Identity-Aware Proxy (IAP).

And finally, as we all know, K8s is great when it comes to the scalability, reliability, and portability of infrastructure. Since Gojek uses GCP extensively, we chose Google Kubernetes Engine (GKE), Google's managed K8s service, to spin up a cluster and run everything on it.

The set-up, process and everything in between

Set up the GKE cluster with the default configuration, in either a public or a private network. Once it is created, configure kubectl to connect to the cluster. kubectl can run on any machine; I used my dev system. Since I created a public cluster, I could reach the K8s API from any machine.

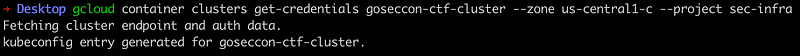

```shell
gcloud container clusters get-credentials goseccon-ctf-cluster --zone us-central1-c --project sec-infra
```

sec-infra is the name of the GCP project and goseccon-ctf-cluster is the name of the cluster created with GKE. If everything goes well, you will see a kubeconfig entry created, which kubectl uses to connect to the cluster. You don't have to worry about how that connection works; this is the beauty of the K8s APIs.
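To confirm that kubectl is pointed at the right cluster, you can compare `kubectl config current-context` with the context name gcloud writes, which follows the pattern `gke_<project>_<location>_<cluster>`. A minimal sketch; the helper names are hypothetical, and `current_context()` assumes kubectl is on your PATH:

```python
import subprocess


def gke_context_name(project: str, location: str, cluster: str) -> str:
    """Context name written by `gcloud container clusters get-credentials`."""
    return f"gke_{project}_{location}_{cluster}"


def current_context() -> str:
    """Return the active kubeconfig context (requires kubectl on PATH)."""
    result = subprocess.run(
        ["kubectl", "config", "current-context"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def points_at(project: str, location: str, cluster: str, context: str) -> bool:
    """True if `context` is the one gcloud created for this GKE cluster."""
    return context.strip() == gke_context_name(project, location, cluster)


if __name__ == "__main__":
    print(gke_context_name("sec-infra", "us-central1-c", "goseccon-ctf-cluster"))
```

With credentials fetched, `points_at("sec-infra", "us-central1-c", "goseccon-ctf-cluster", current_context())` should return `True`.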

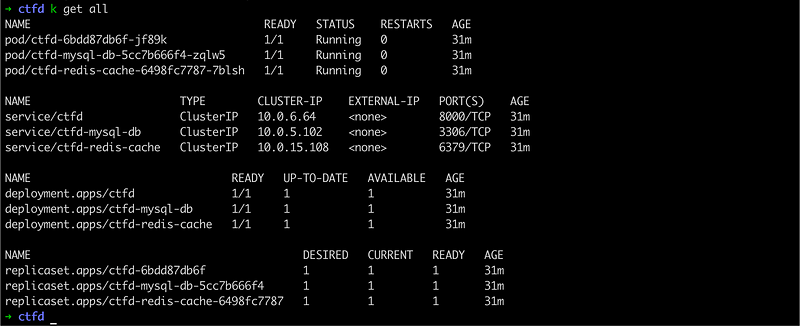

Run `kubectl get all` to check the connection and the creation of the default namespace and services. If you see something like this, we are good to go. Note that on my local system kubectl is aliased to `k`; you know why.

How about creating a separate namespace for the portal + challenges? Let's name it ctfd and use it for all further deployments.

```shell
kubectl create ns ctfd
kubectl config set-context --current --namespace=ctfd
```

So we are good with the setup of the K8s APIs. The next step is to deploy the portal and challenges on this cluster.
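Since one of our constraints was quick, effortless redeployment, you may prefer to keep even the namespace as a manifest under version control. `kubectl apply` accepts JSON as well as YAML, so a tiny script can emit it. This is a sketch of that idea, not what we ran:

```python
import json


def namespace_manifest(name: str) -> dict:
    """A Namespace object equivalent to `kubectl create ns <name>`."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


if __name__ == "__main__":
    # Usage: python namespace.py | kubectl apply -f -
    print(json.dumps(namespace_manifest("ctfd"), indent=2))
```

Unlike `kubectl create ns`, `kubectl apply` does not fail if the namespace already exists, so the script can be re-run safely.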

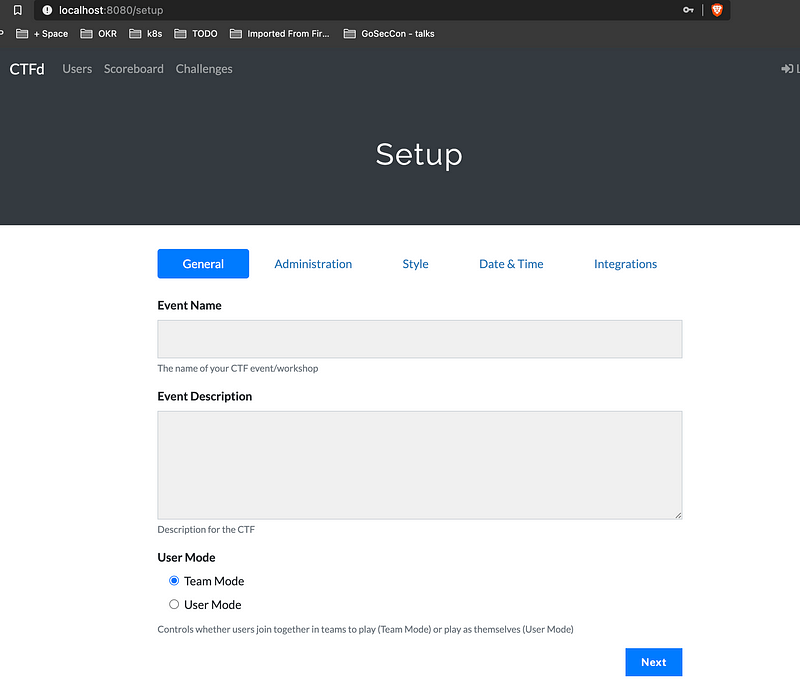

Setting up CTFd in our cluster

We will be using K8s commands extensively from here on. So, if you are not comfortable with Kubernetes commands, I would encourage you to read my short practical series on Kubernetes-in-30-mins.

Let’s start with setting up the MySQL pods.

Copy this deployment file and apply it. It creates a PV and a PVC, which are automatically linked to the pod running the MySQL instance.

```shell
kubectl apply -f ctfd-mysql-deployment.yaml
```
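For a rough idea of what such a file contains, the sketch below emits a PVC, a single-replica MySQL Deployment that mounts it, and a ClusterIP Service, as JSON that `kubectl apply -f -` accepts. The names (`ctfd-mysql`, `ctfd-mysql-secret`), the `mysql:5.7` image, and the 10Gi size are assumptions; the hypothetical Secret is expected to hold the official image's `MYSQL_ROOT_PASSWORD`, `MYSQL_DATABASE`, `MYSQL_USER`, and `MYSQL_PASSWORD` variables.

```python
import json

NAMESPACE = "ctfd"
APP = "ctfd-mysql"  # hypothetical name, reused for the PVC, Deployment and Service


def mysql_manifests(storage: str = "10Gi") -> dict:
    """Return a v1 List with a PVC, a MySQL Deployment and its Service."""
    labels = {"app": APP}
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": f"{APP}-data", "namespace": NAMESPACE},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            # No storageClassName: the cluster's default StorageClass provisions the PV.
            "resources": {"requests": {"storage": storage}},
        },
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": APP, "namespace": NAMESPACE},
        "spec": {
            "replicas": 1,
            # Recreate avoids two MySQL pods using the same data volume during an update.
            "strategy": {"type": "Recreate"},
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "mysql",
                        "image": "mysql:5.7",  # assumed image and version
                        # Hypothetical Secret holding the MYSQL_* environment variables.
                        "envFrom": [{"secretRef": {"name": f"{APP}-secret"}}],
                        "ports": [{"containerPort": 3306}],
                        "volumeMounts": [{
                            "name": "data",
                            "mountPath": "/var/lib/mysql",
                            # A subdirectory keeps the disk's lost+found out of MySQL's data dir.
                            "subPath": "mysql",
                        }],
                    }],
                    # The pod mounts the PVC by name: this is the "link".
                    "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": f"{APP}-data"}}],
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": APP, "namespace": NAMESPACE},
        "spec": {"selector": labels, "ports": [{"port": 3306, "targetPort": 3306}]},
    }
    return {"apiVersion": "v1", "kind": "List", "items": [pvc, deployment, service]}


if __name__ == "__main__":
    # Usage: python mysql.py | kubectl apply -f -
    print(json.dumps(mysql_manifests(), indent=2))
```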

Next, create a Redis instance that CTFd uses as a cache, so it performs better in terms of latency. Copy the YAML below and apply it. Again, the linking is taken care of in the spec.
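As an illustration of how that linking works, here is a hedged sketch of a Redis Deployment and Service: the Service's selector matches the pod's labels, and that match is what connects them. The name `ctfd-redis` and the `redis:6-alpine` tag are assumptions.

```python
import json

NAMESPACE = "ctfd"
APP = "ctfd-redis"  # hypothetical; CTFd would reach it as redis://ctfd-redis:6379


def redis_manifests() -> dict:
    """Return a v1 List with a single-replica Redis Deployment and its Service."""
    labels = {"app": APP}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": APP, "namespace": NAMESPACE},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "redis",
                        "image": "redis:6-alpine",  # assumed image tag
                        "ports": [{"containerPort": 6379}],
                    }],
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": APP, "namespace": NAMESPACE},
        # The selector matching the pod labels is what links the Service to the pod.
        "spec": {"selector": labels, "ports": [{"port": 6379, "targetPort": 6379}]},
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


if __name__ == "__main__":
    # Usage: python redis.py | kubectl apply -f -
    print(json.dumps(redis_manifests(), indent=2))
```

This sketch gives Redis no persistent volume, on the assumption that it only serves as a cache; a restart then costs only a cold cache.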

Complete the backend for the CTFd panel with the actual CTFd deployment YAML.
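CTFd reads its database and cache locations from the `DATABASE_URL` and `REDIS_URL` environment variables, and uses `SECRET_KEY` as its Flask secret key. A hedged sketch of a CTFd Deployment and Service wired to hypothetical `ctfd-mysql` and `ctfd-redis` services; the `ctfd-secrets` Secret is also hypothetical, and its `DATABASE_URL` entry would look like `mysql+pymysql://ctfd:<password>@ctfd-mysql/ctfd`.

```python
import json

NAMESPACE = "ctfd"
APP = "ctfd"  # hypothetical; a proxy in front would target http://ctfd:8000
SECRET = "ctfd-secrets"  # hypothetical Secret with DATABASE_URL and SECRET_KEY keys


def secret_env(name: str) -> dict:
    """Env var read from the hypothetical Secret, keeping credentials out of the manifest."""
    return {"name": name, "valueFrom": {"secretKeyRef": {"name": SECRET, "key": name}}}


def ctfd_manifests(replicas: int = 1) -> dict:
    """Return a v1 List with the CTFd Deployment and a ClusterIP Service."""
    labels = {"app": APP}
    container = {
        "name": "ctfd",
        "image": "ctfd/ctfd:latest",  # pin a specific release in practice
        "ports": [{"containerPort": 8000}],  # the CTFd image serves on port 8000
        "env": [
            secret_env("DATABASE_URL"),
            secret_env("SECRET_KEY"),
            {"name": "REDIS_URL", "value": "redis://ctfd-redis:6379"},
        ],
        # HTTP probes treat any status from 200 to 399 as success, so CTFd's
        # redirect to /setup before first-time setup does not fail the probe.
        "readinessProbe": {"httpGet": {"path": "/", "port": 8000}, "periodSeconds": 10},
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": APP, "namespace": NAMESPACE},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {"metadata": {"labels": labels}, "spec": {"containers": [container]}},
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": APP, "namespace": NAMESPACE},
        "spec": {"selector": labels, "ports": [{"port": 8000, "targetPort": 8000}]},
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


if __name__ == "__main__":
    # Usage: python ctfd.py | kubectl apply -f -
    print(json.dumps(ctfd_manifests(), indent=2))
```

Setting `SECRET_KEY` explicitly matters if you later scale CTFd beyond one replica; otherwise each pod may generate its own key.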

Once you apply these three YAML files, you should see the cluster in a state similar to the one below.
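Besides eyeballing the output, you can check the same thing programmatically: every pod in the namespace should report a `Ready` condition of `True`. A small sketch that parses `kubectl get pods -o json`; the helper names are hypothetical:

```python
import json
import subprocess


def not_ready_pods(pod_list: dict) -> list:
    """Names of pods whose Ready condition is not "True"."""
    not_ready = []
    for pod in pod_list.get("items", []):
        conditions = pod.get("status", {}).get("conditions", [])
        if not any(c.get("type") == "Ready" and c.get("status") == "True" for c in conditions):
            not_ready.append(pod["metadata"]["name"])
    return not_ready


def fetch_pods(namespace: str = "ctfd") -> dict:
    """Run `kubectl get pods -o json` for the namespace (needs kubectl and cluster access)."""
    out = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return json.loads(out)
```

Once MySQL, Redis, and CTFd are all up, `not_ready_pods(fetch_pods())` should return an empty list.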

The next and final step is to expose the CTFd pod through an Nginx pod and service. Here is the YAML for you to explore and apply.
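One way to run Nginx as the proxy is to ship its server block in a ConfigMap and mount it over the image's default config. The sketch below assumes CTFd is reachable as `http://ctfd:8000` (a hypothetical Service name and port) and uses the `nginx:stable` image; the names and the upload size limit are assumptions.

```python
import json

NAMESPACE = "ctfd"
APP = "ctfd-nginx"

# Reverse-proxy config; "ctfd:8000" is a hypothetical CTFd Service name and port.
NGINX_CONF = """\
server {
    listen 80;
    client_max_body_size 100M;  # allow challenge file uploads through the proxy

    location / {
        proxy_pass http://ctfd:8000;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
"""


def nginx_manifests() -> dict:
    """Return a v1 List with the Nginx ConfigMap and Deployment."""
    labels = {"app": APP}
    config_map = {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": f"{APP}-conf", "namespace": NAMESPACE},
        "data": {"default.conf": NGINX_CONF},
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": APP, "namespace": NAMESPACE},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "nginx",
                        "image": "nginx:stable",  # assumed image tag
                        "ports": [{"containerPort": 80}],
                        # Replace the image's default server block with the proxy config.
                        "volumeMounts": [{
                            "name": "conf",
                            "mountPath": "/etc/nginx/conf.d/default.conf",
                            "subPath": "default.conf",
                        }],
                    }],
                    "volumes": [{"name": "conf", "configMap": {"name": f"{APP}-conf"}}],
                },
            },
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [config_map, deployment]}


if __name__ == "__main__":
    # Usage: python nginx.py | kubectl apply -f -
    print(json.dumps(nginx_manifests(), indent=2))
```

Nginx resolves the `proxy_pass` host when it starts, so the CTFd Service should exist before the Nginx pod comes up; otherwise Nginx exits with "host not found in upstream".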

After this step, Nginx runs as a proxy in front of CTFd, but it is not yet exposed to the public internet. To check whether Nginx is working, forward a local port to the service, for example `kubectl port-forward service/ctfd-nginx 8080:80` (assuming the service listens on port 80), and open http://localhost:8080.
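With the port-forward running, a scripted check can stand in for the browser. A minimal standard-library sketch; `http_status` is a hypothetical helper, and the URL assumes you forwarded local port 8080:

```python
import urllib.error
import urllib.request


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the final HTTP status for `url` (urllib follows redirects)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses arrive as exceptions; report their status code instead.
        return err.code
```

`http_status("http://localhost:8080/")` should return 200 when Nginx reaches CTFd. A 502 usually means Nginx is up but cannot reach the CTFd service behind it.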

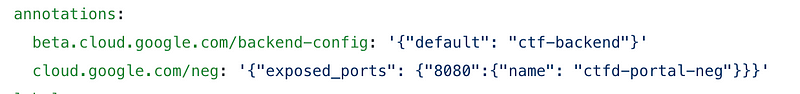

Did you notice the network endpoint group (NEG) annotation in each service spec? The load balancer (LB) needs it, as discussed below.
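On GKE, a standalone NEG is requested with the `cloud.google.com/neg` annotation, whose JSON value lists the Service ports to expose. A sketch of the Nginx Service with that annotation; the pod label and port numbers are assumptions, and NEGs require a VPC-native cluster.

```python
import json

NEG_ANNOTATION = "cloud.google.com/neg"


def nginx_service(port: int = 80, target_port: int = 80) -> dict:
    """ClusterIP Service for the Nginx pods, with a standalone NEG requested for `port`."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": "ctfd-nginx",
            "namespace": "ctfd",
            "annotations": {
                # GKE creates a zonal NEG for each listed Service port; its endpoints are
                # pod IP:targetPort pairs, which an HTTPS LB backend service can use.
                NEG_ANNOTATION: json.dumps({"exposed_ports": {str(port): {}}}),
            },
        },
        "spec": {
            "type": "ClusterIP",
            "selector": {"app": "ctfd-nginx"},  # hypothetical pod label
            "ports": [{"name": "http", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    # Usage: python nginx_service.py | kubectl apply -f -
    print(json.dumps(nginx_service(), indent=2))
```

The Service can stay `ClusterIP`: the LB talks to the pod endpoints in the NEG directly, so no node port or external IP is needed.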

Exposing service/ctfd-nginx to the public internet

This is done through IAP. To put any service running in GKE behind IAP, there are two routes:

- With ingress-controllers

- LB with NEG

Long(ish) note:

There isn't just one ingress controller for K8s but many; the full list can be found here. Each ingress controller is configured differently. I explored this route for close to three days and was not able to get it working behind IAP. I was able to install the nginx ingress controller and get an IP for external access, but I could not provision a Google-managed certificate, map it to the LB, and put that behind IAP. I tried the same with a range of ingress controllers. To reiterate: each ingress controller is configured differently, and its docs are specific to that controller. This route did not work for me, but the three days I spent on it taught me a lot about ingress controllers and how to implement them on K8s.

The second route is the one I identified and architected around the K8s concept of exposing a service through a NEG. I exposed a NEG for each service and then put that service on the public internet behind IAP. All our services (here, only the CTFd service) have their NEGs working properly.

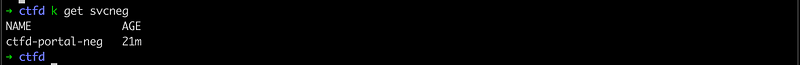

Get the list with `kubectl get svcneg`. This command is great for debugging.
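GKE also records the NEGs it created in the Service's `cloud.google.com/neg-status` annotation, a JSON value with `network_endpoint_groups` (Service port to NEG name) and `zones`. Those are the names and zones you pick when configuring the LB. A sketch that reads them from `kubectl get svc -o json`; the helper names are hypothetical, and it is worth checking the annotation format against your own cluster:

```python
import json
import subprocess
from typing import Optional


def neg_status(service: dict) -> dict:
    """Parse the cloud.google.com/neg-status annotation ({} if GKE has not set it yet)."""
    raw = service.get("metadata", {}).get("annotations", {}).get("cloud.google.com/neg-status")
    return json.loads(raw) if raw else {}


def neg_for_port(service: dict, port: int) -> Optional[str]:
    """Name of the NEG GKE created for a Service port, or None."""
    return neg_status(service).get("network_endpoint_groups", {}).get(str(port))


def fetch_service(name: str, namespace: str = "ctfd") -> dict:
    """Run `kubectl get svc <name> -o json` (needs kubectl and cluster access)."""
    out = subprocess.run(
        ["kubectl", "get", "svc", name, "-n", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return json.loads(out)
```

For example, `neg_for_port(fetch_service("ctfd-nginx"), 80)` returns the NEG name to select under the new backend.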

Configuring HTTPS LB

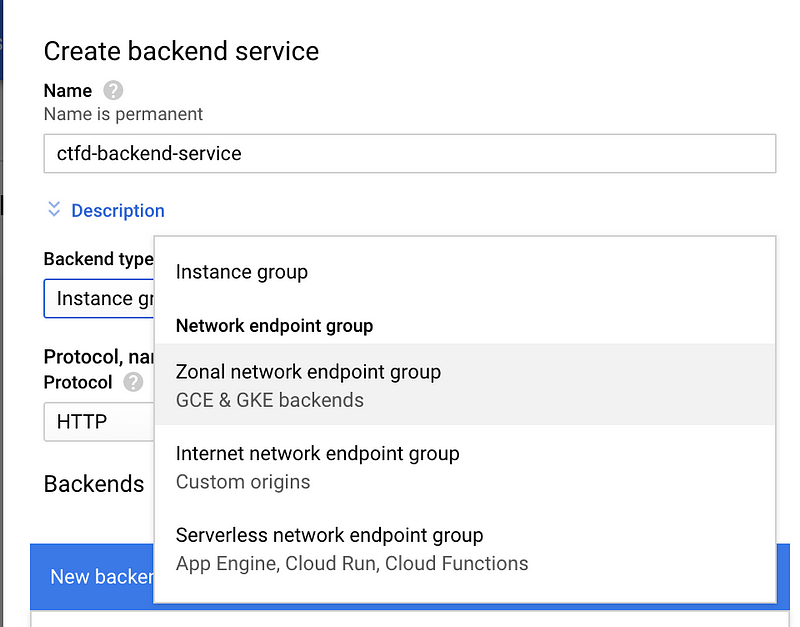

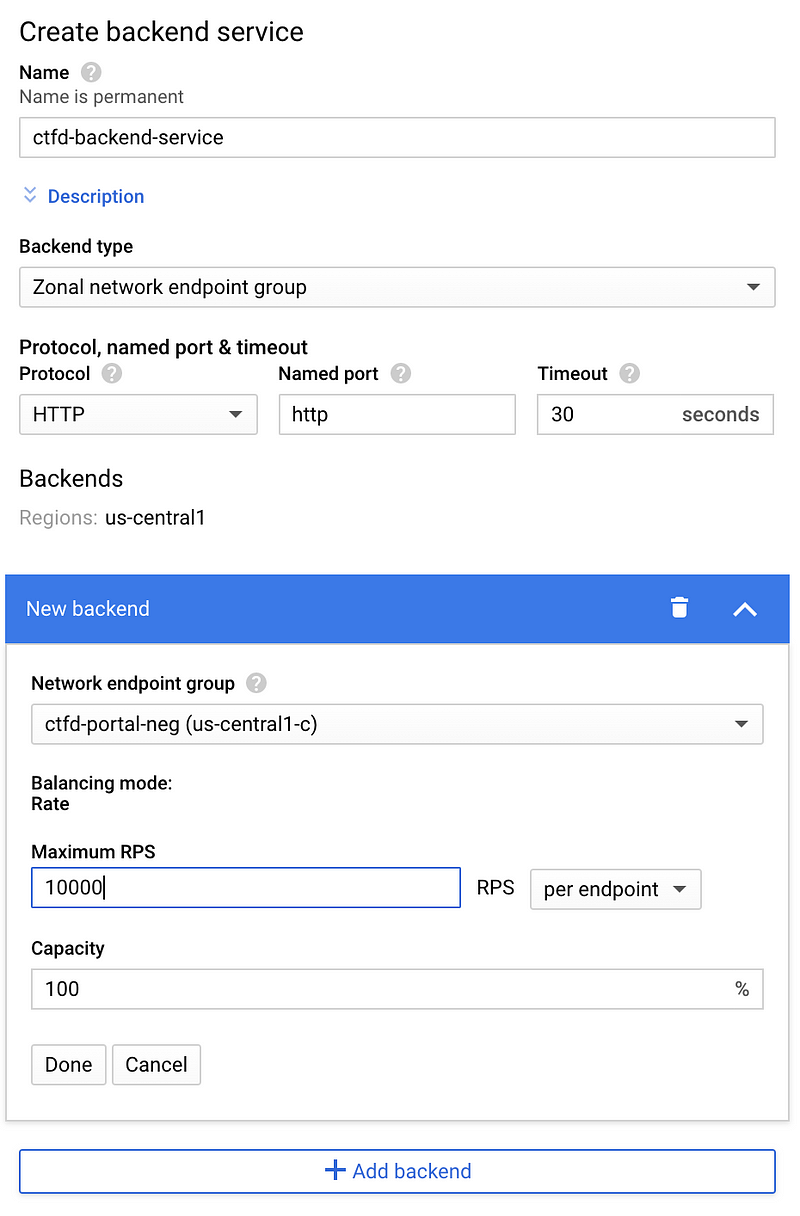

We need to expose our apps running in NEGs through an LB, and the LB can then be put behind IAP with just one click. Now, let's get the LB configured. It is going to be a lengthy but fruitful process. Start by creating an HTTPS LB and, in the backend configuration, create a backend service for the NEG.

Repeat this backend service creation step for each service you want to expose.

Pick the NEG from the list under new backend.
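If you prefer the CLI to the console, the backend step corresponds roughly to two gcloud calls: create a global backend service with a health check (covered next), then attach the zonal NEG. A sketch that builds the argv lists; all resource names are hypothetical, and the per-endpoint rate of 100 is an arbitrary example value:

```python
import shlex
import subprocess


def backend_service_commands(backend: str, neg: str, zone: str, health_check: str) -> list:
    """gcloud argv lists: create a global backend service, then attach a zonal NEG to it."""
    return [
        ["gcloud", "compute", "backend-services", "create", backend,
         "--global", "--protocol=HTTP", f"--health-checks={health_check}"],
        ["gcloud", "compute", "backend-services", "add-backend", backend,
         "--global",
         f"--network-endpoint-group={neg}",
         f"--network-endpoint-group-zone={zone}",
         # RATE balancing with a per-endpoint cap; 100 is an arbitrary example value.
         "--balancing-mode=RATE", "--max-rate-per-endpoint=100"],
    ]


def run(commands: list) -> None:
    """Execute each command, stopping at the first failure (needs an authenticated gcloud)."""
    for argv in commands:
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Hypothetical names; the NEG name comes from `kubectl get svcneg` or neg-status.
    for argv in backend_service_commands(
        "ctfd-nginx-backend", "k8s1-example-neg", "us-central1-c", "ctfd-nginx-hc"
    ):
        print(shlex.join(argv))
```

Printing the commands first, and only calling `run` after reviewing them, keeps the sketch safe to try.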

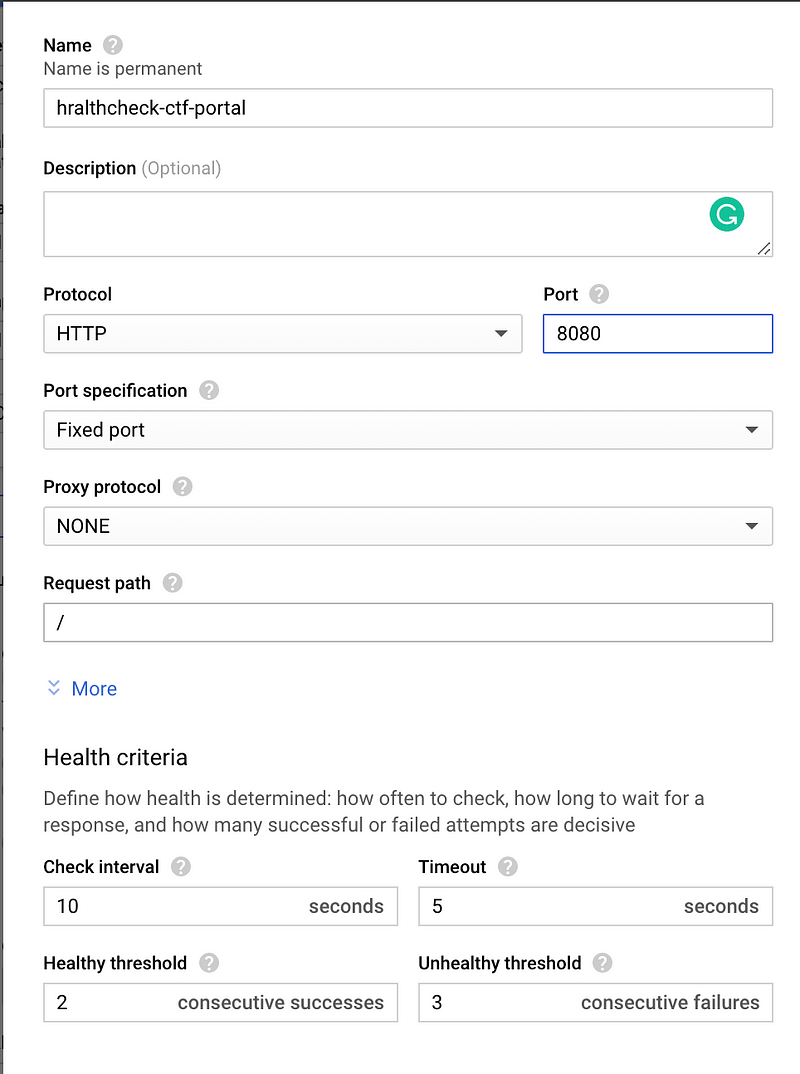

The next critical step is to create a health check. Without a health check, the LB will not pass requests to the NEG. While creating the health check, note two things:

- The health check for the NEG should be configured with the targetPort from the service configuration. Yes, this can be confusing; I spent a great amount of time figuring it out. In short, the health check should probe the service's targetPort rather than its exposed port.

- Make sure the firewall ports are open between the LB and the NEG nodes. This can be achieved with the command below. Since I knew the services would only have two targetPorts, 80 and 8080, I opened only those ports:

```shell
# 130.211.0.0/22 and 35.191.0.0/16 are the source ranges of Google's LB proxies and health checkers.
# The target tag is this cluster's node network tag; yours will differ.
gcloud compute firewall-rules create fw-allow-health-check-and-proxy \
    --network=default \
    --action=allow \
    --direction=ingress \
    --target-tags=gke-goseccon-cluster-6d21d830-node \
    --source-ranges=130.211.0.0/22,35.191.0.0/16 \
    --rules="tcp:80,tcp:8080"
```
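The targetPort rule follows from how NEGs work: their endpoints are pod IP:targetPort pairs, so the probe must use targetPort. A sketch that resolves the probe port from a Service spec (including named targetPorts, which Kubernetes resolves against the container's named ports) and builds the matching gcloud health-check command; the helper names and the `/` request path are assumptions:

```python
from typing import Optional, Union


def health_check_port(service: dict, service_port: int,
                      container_ports: Optional[dict] = None) -> int:
    """Port the LB health check must probe for a NEG-backed Service port."""
    for entry in service["spec"]["ports"]:
        if entry["port"] != service_port:
            continue
        # Kubernetes defaults targetPort to port when it is omitted.
        target: Union[int, str] = entry.get("targetPort", entry["port"])
        if isinstance(target, str):
            # Named targetPort (e.g. "http"): look it up in the container's named ports.
            if not container_ports or target not in container_ports:
                raise KeyError(f"named targetPort {target!r} not found in container ports")
            return container_ports[target]
        return target
    raise ValueError(f"Service has no port {service_port}")


def health_check_command(name: str, port: int, path: str = "/") -> list:
    """gcloud argv for an HTTP health check that probes `port` at `path`."""
    return ["gcloud", "compute", "health-checks", "create", "http", name,
            f"--port={port}", f"--request-path={path}"]


if __name__ == "__main__":
    # Hypothetical Service exposing port 80 but serving on 8080 inside the pod.
    svc = {"spec": {"ports": [{"port": 80, "targetPort": 8080}]}}
    print(health_check_command("ctfd-nginx-hc", health_check_port(svc, 80)))
```

In the hypothetical example, the Service port is 80 but the probe goes to 8080, which is exactly the mismatch that makes the LB mark a healthy backend as unhealthy.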

For the CTFd Nginx service, this is what the health check looks like.

And that’s it!

Deploy all the web challenges the same way, and create their services with NEGs and backend services in a similar way. Make sure the LB routing rules send traffic to the challenges.
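The LB's URL map decides which backend service receives a request. If each challenge gets its own hostname, the host rules could be added from the CLI along these lines. This is a sketch: the host names, the URL map name `ctf-lb`, and the backend names are hypothetical, and the console's host and path rules achieve the same thing.

```python
import shlex


def host_rule_commands(url_map: str, host_to_backend: dict) -> list:
    """One `add-path-matcher` call per host, sending that host to its backend service."""
    commands = []
    for i, (host, backend) in enumerate(sorted(host_to_backend.items())):
        commands.append([
            "gcloud", "compute", "url-maps", "add-path-matcher", url_map,
            f"--path-matcher-name=host-{i}",  # matcher names must be unique in the URL map
            f"--default-service={backend}",
            f"--new-hosts={host}",
        ])
    return commands


if __name__ == "__main__":
    # Hypothetical hosts and backend service names.
    routes = {
        "ctf.example.com": "ctfd-nginx-backend",
        "chal-web1.example.com": "chal-web1-backend",
    }
    for argv in host_rule_commands("ctf-lb", routes):
        print(shlex.join(argv))
```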

If you want to redeploy the same CTF with the same challenges, consider deploying all the specs from this repo.

If you have configured the LB the way I did, configuring IAP will be really easy: it's a single-click operation.

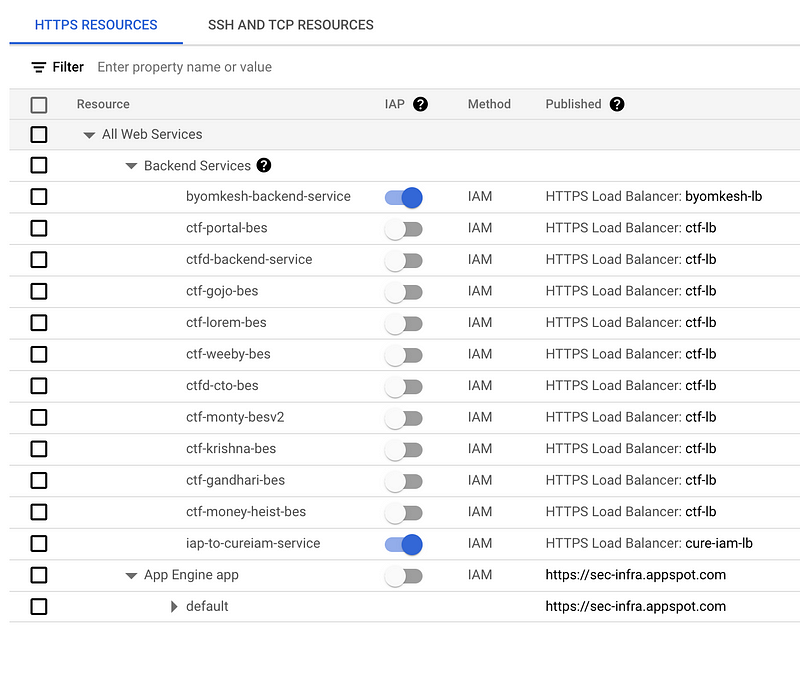

Go to the IAP page and you will see the list of backend services you created. Clicking the enable button turns on IAP for that backend service. Simple enough. 💁‍♂️

You might need to create an OAuth client ID if you are configuring IAP for the first time. This is a standard process covered in Google's IAP documentation.
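The console click has a CLI counterpart: enable IAP on the backend service, then grant the whole company domain the IAP accessor role, which is what limits access to domain users. A sketch that builds the gcloud calls; `example.com` stands in for your Google Workspace domain, the backend name is hypothetical, and the OAuth flags are only added if you pass the client from the previous step:

```python
import shlex
from typing import Optional


def iap_commands(backend: str, domain: str,
                 client_id: Optional[str] = None,
                 client_secret: Optional[str] = None) -> list:
    """Enable IAP on a backend service and grant every account in `domain` access."""
    enable = ["gcloud", "iap", "web", "enable",
              "--resource-type=backend-services", f"--service={backend}"]
    if client_id and client_secret:
        # Pass the OAuth client created earlier, if you created one.
        enable += [f"--oauth2-client-id={client_id}",
                   f"--oauth2-client-secret={client_secret}"]
    grant = ["gcloud", "iap", "web", "add-iam-policy-binding",
             "--resource-type=backend-services", f"--service={backend}",
             f"--member=domain:{domain}",  # every account in the Workspace domain
             "--role=roles/iap.httpsResourceAccessor"]
    return [enable, grant]


if __name__ == "__main__":
    for argv in iap_commands("ctfd-nginx-backend", "example.com"):
        print(shlex.join(argv))
```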

Once everything is done, as a last step, check whether the services are accessible on the public internet, both with and without IAP. 🖖
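Part of that check can be scripted: an anonymous request should never reach CTFd. The sketch below assumes IAP answers an unauthenticated request either with a redirect to the Google sign-in page (`accounts.google.com`) or with a 401/403. It disables redirect-following so the 3xx stays visible; the helper names are hypothetical.

```python
import urllib.error
import urllib.request


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # surface the 3xx as an HTTPError instead of following it


def iap_blocks_anonymous(url: str, timeout: float = 10.0) -> bool:
    """True if an unauthenticated request is stopped (sign-in redirect or 401/403)."""
    opener = urllib.request.build_opener(_NoRedirect)
    try:
        with opener.open(url, timeout=timeout):
            return False  # a 2xx without credentials means the app is not behind IAP
    except urllib.error.HTTPError as err:
        if 300 <= err.code < 400:
            return "accounts.google.com" in err.headers.get("Location", "")
        return err.code in (401, 403)
```

Signing in through the browser with a domain account then covers the other half of the check.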

A big thanks to other members of the ProdSec team at Gojek: Sneha Anand, Alisha Gupta, Aseem Shrey, and Sanjog Panda for creating CTF challenges and Varun Joshi for helping with debugging issues with GKE and K8s.
