Demystifying the Packet Flow in Istio — Part 1

Our learnings about Istio’s packet flow and how it manages immense traffic.

By Saptanto Utomo

At Gojek, we have deployed Istio 1.4 in production with a multi-cluster setup, integrated it with a few services whose throughput reaches ~195K requests/min, and built an orchestration layer on top of it to use Istio for canary deployments. This is Part 1 of a two-part series in which we demystify the packet flow in Istio.

If you’d first like to read about our learnings from running Istio’s networking APIs in production, head to the blog below. 👇

Before we decided to deploy Istio, we had a simple question in mind:

If Istio works its magic on all our packet traffic, how does it manage this traffic in the first place?

Answering this question helped us understand how each feature is implemented in Istio and how to debug it when something goes wrong.

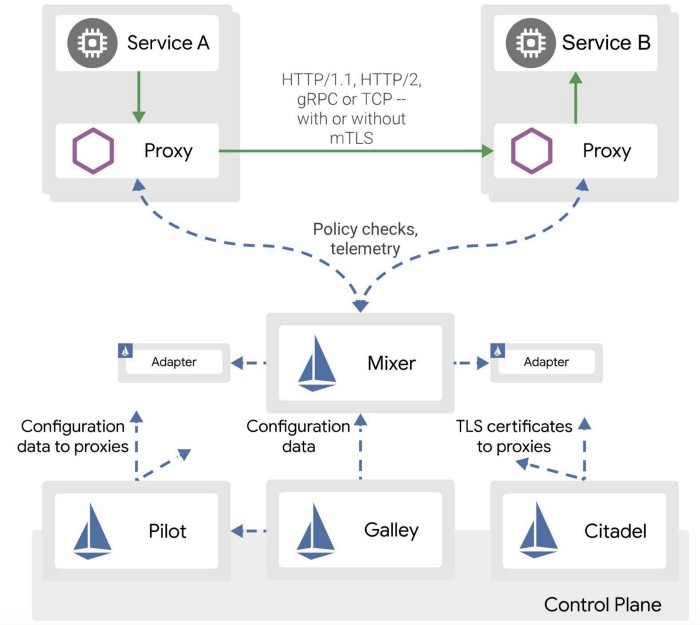

Istio, as a service mesh, consists of two major components:

Control plane: Manages and configures the data plane to route traffic.

Data plane: Composed of a set of intelligent proxies (Envoy) deployed as sidecars. The proxies mediate and control all network communication between microservices.

Istio Control Plane

Pilot

Istio Pilot serves these purposes:

- Obtain service information from the service registry, such as Kubernetes or Consul.

- Watch the Kubernetes API for Istio custom resources (CRDs).

- Transform service information and Istio custom resources into a format the data plane understands.

- Act as the Envoy xDS server for all Istio sidecars.

Istio doesn’t have its own service discovery mechanism. Instead, it relies on registries such as Kubernetes or Consul. Istio has its own Custom Resource Definitions (CRDs), which can be used for configuration such as defining traffic rules or extending the mesh to external services. Pilot translates this information into a format understandable by the data plane, which is Envoy. Envoy can be configured dynamically through the xDS protocol, and Pilot acts as the xDS server, responsible for pushing the configuration to the data plane.
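To make Pilot’s translation step more concrete, here is a minimal Python sketch, assuming a toy registry and a toy weighted routing rule rather than Pilot’s real data structures. It turns registry entries into cluster (CDS) and endpoint (EDS) data, and a weighted rule into route (RDS) data. The outbound|<port>|<subset>|<host> cluster names follow the convention Istio uses for outbound clusters; the RegistryService and WeightedRule types, the service host, subsets, IPs, and weights are hypothetical. In real Istio, subsets come from DestinationRules; they are folded into the registry entry here for brevity.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RegistryService:
    """A service as Pilot might see it in the registry (hypothetical, simplified)."""
    host: str
    port: int
    # Subset name -> endpoint IPs. In Istio, subsets come from DestinationRules.
    subsets: dict[str, list[str]]


@dataclass
class WeightedRule:
    """Stand-in for a routing rule that splits traffic by weight (hypothetical)."""
    host: str
    weights: dict[str, int]  # subset name -> percentage of traffic


def outbound_cluster(port: int, subset: str, host: str) -> str:
    # Istio-style outbound cluster name: outbound|<port>|<subset>|<host>
    return f"outbound|{port}|{subset}|{host}"


def translate(services: list[RegistryService], rules: list[WeightedRule]) -> dict:
    """Translate registry data and rules into CDS/EDS/RDS-shaped data."""
    cds: list[str] = []
    eds: dict[str, list[str]] = {}
    rds: dict[str, list[dict]] = {}
    by_host = {svc.host: svc for svc in services}

    # Registry -> one cluster (CDS) per subset, plus its endpoints (EDS).
    for svc in services:
        for subset, ips in svc.subsets.items():
            name = outbound_cluster(svc.port, subset, svc.host)
            cds.append(name)
            eds[name] = [f"{ip}:{svc.port}" for ip in ips]

    # Rules -> weighted routes (RDS), keyed by "<host>:<port>".
    for rule in rules:
        svc = by_host[rule.host]
        rds[f"{svc.host}:{svc.port}"] = [
            {"cluster": outbound_cluster(svc.port, subset, svc.host), "weight": weight}
            for subset, weight in rule.weights.items()
        ]
    return {"cds": cds, "eds": eds, "rds": rds}


if __name__ == "__main__":
    host = "reviews.default.svc.cluster.local"  # hypothetical service
    config = translate(
        [RegistryService(host, 9080, {"v1": ["10.0.0.5"], "v2": ["10.0.0.6"]})],
        [WeightedRule(host, {"v1": 90, "v2": 10})],
    )
    for route in config["rds"][f"{host}:9080"]:
        print(route)
```

The point of the sketch is the shape of the output: Envoy never sees the registry or the CRDs directly, only the clusters, endpoints, and routes derived from them.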

Istio Data Plane

Istio uses Envoy proxy as the Data Plane.

Envoy Basic Concepts

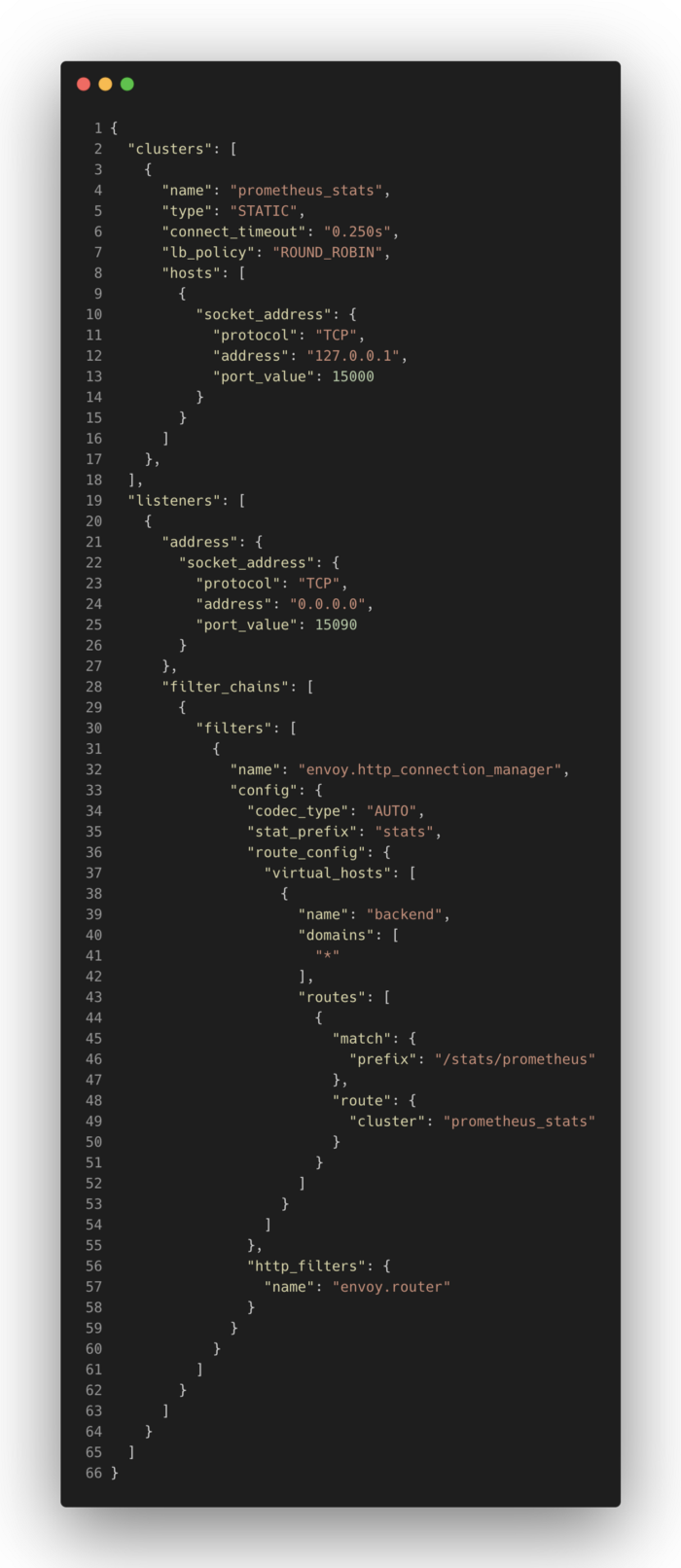

Here’s a sample Envoy configuration to help us understand Envoy’s basic concepts:

In the above example, Envoy is instructed to bind to port 15090 and proxy all incoming HTTP requests for any domain with the /stats/prometheus URL path to localhost:15000.

Let’s break the configuration down into smaller chunks.

There are 4 basic building blocks in Envoy: Listeners, Filters, Routes, and Clusters.

First, there are listeners (line 19). A listener tells Envoy to bind to a port, in this case 15090. Below the listeners are filters, which tell the listeners what to do with the requests they receive. You can chain multiple filters to process each request. Envoy has built-in network filters; in the above example (line 32), we use envoy.http_connection_manager to proxy HTTP requests. envoy.http_connection_manager has its own list of HTTP filters that further process the requests handled by the connection manager. In the above example (line 56), we use envoy.router to route requests according to the configured route table.

Routes hold the information on how to proxy requests. In our example, we have only one route (line 36). You can specify a list of domains that is matched against the incoming request’s Host header; here, we use * to match any domain (line 41). For all matched requests, we can then match the URL path and route them to a specific cluster.

So far, our Envoy is bound to port 15090 and proxies all incoming HTTP requests with the /stats/prometheus URL path to prometheus_cluster (line 44). A cluster is a group of endpoints that act as the backends for a service: if you have four instances of a service, you might end up with four addresses in a cluster. Our example is simple, with only one cluster containing a single address, localhost on port 15000 (line 2).
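The walk-through above follows a request through four hops: listener, network filter, route, cluster. The Python sketch below traces the same chain over a simplified, Envoy-shaped dictionary. It is not a valid Envoy bootstrap, and it assumes prefix matching on the URL path and exact-or-* matching on the Host header; the listener name and the dictionary layout are hypothetical.

```python
# Simplified, Envoy-shaped configuration mirroring the sample above.
# Not a valid Envoy bootstrap: field names and nesting are trimmed for clarity.
CONFIG = {
    "listeners": [{
        "name": "prometheus_listener",  # hypothetical name
        "port": 15090,
        "filters": [{
            "name": "envoy.http_connection_manager",
            "route_config": {
                "virtual_hosts": [{
                    "domains": ["*"],
                    "routes": [{"prefix": "/stats/prometheus",
                                "cluster": "prometheus_cluster"}],
                }],
            },
            # In Envoy, the router HTTP filter is what performs the route lookup.
            "http_filters": [{"name": "envoy.router"}],
        }],
    }],
    "clusters": [{"name": "prometheus_cluster",
                  "endpoints": [("127.0.0.1", 15000)]}],
}


def domain_matches(pattern: str, host: str) -> bool:
    # "*" matches any Host header; anything else must match exactly (simplified).
    return pattern == "*" or pattern == host


def resolve(config: dict, port: int, host: str, path: str):
    """Follow listener -> filter -> route -> cluster; return (ip, port) or None."""
    listener = next((lis for lis in config["listeners"] if lis["port"] == port), None)
    if listener is None:
        return None  # no listener bound to this port
    for network_filter in listener["filters"]:
        if network_filter["name"] != "envoy.http_connection_manager":
            continue  # only the HTTP connection manager routes HTTP requests
        vhosts = network_filter["route_config"]["virtual_hosts"]
        # First virtual host whose domains match (real Envoy prefers the most specific).
        vhost = next((vh for vh in vhosts
                      if any(domain_matches(d, host) for d in vh["domains"])), None)
        if vhost is None:
            return None
        for route in vhost["routes"]:
            if path.startswith(route["prefix"]):
                cluster = next((c for c in config["clusters"]
                                if c["name"] == route["cluster"]), None)
                # Single endpoint here, so no load balancing is needed.
                return cluster["endpoints"][0] if cluster else None
        return None  # a virtual host matched, but no route did
    return None


if __name__ == "__main__":
    print(resolve(CONFIG, 15090, "example.com", "/stats/prometheus"))  # ('127.0.0.1', 15000)
    print(resolve(CONFIG, 15090, "example.com", "/healthz"))           # None
```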

Envoy xDS

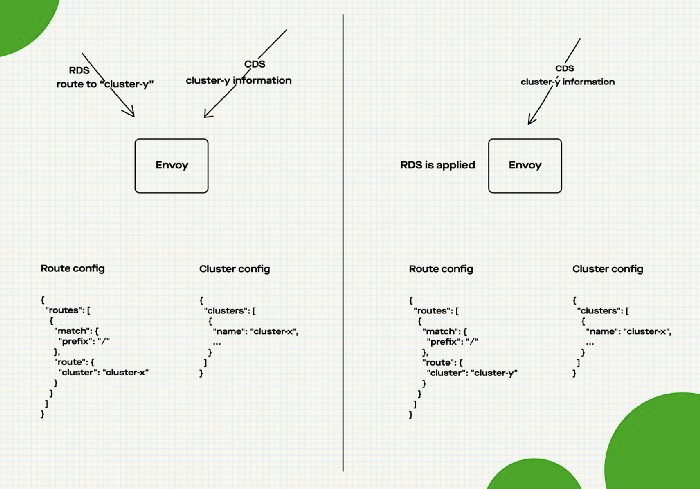

Each of the four basic building blocks in Envoy can be configured through its own discovery service: LDS for listeners and filters, RDS for routes, CDS for clusters, and EDS for endpoints. Together they are called xDS, where the x stands for listener, route, cluster, or endpoint.

xDS gives us the ability to configure Envoy dynamically. However, the discovery services are independent of one another and only eventually consistent, which opens the door to a race condition. Suppose CDS/EDS only know about Cluster X, and an RDS update shifts traffic from Cluster X to Cluster Y. If the route configuration arrives before the cluster/endpoint configuration for Cluster Y, the traffic that should go to Cluster Y is lost, because Envoy has no information about Cluster Y yet.
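The race condition can be reproduced with a toy model. The Python sketch below assumes one request arrives after each xDS update, and counts a request as lost whenever the active route points at a cluster Envoy does not know yet, or one that has no endpoints. The cluster names, endpoint addresses, and the count_lost_requests helper are hypothetical.

```python
def count_lost_requests(updates, requests_per_step=1):
    """Apply xDS updates in order; count requests routed to an unusable cluster.

    Toy model: requests_per_step requests arrive right after each update.
    """
    clusters = {"cluster_x": ["10.0.0.1:8080"]}  # cluster name -> endpoints
    route_target = "cluster_x"                   # cluster the route currently points at
    lost = 0
    for kind, payload in updates:
        if kind == "CDS":
            clusters.setdefault(payload, [])     # cluster known, endpoints not yet
        elif kind == "EDS":
            name, endpoints = payload
            if name in clusters:                 # endpoints only matter for a known cluster
                clusters[name] = list(endpoints)
        elif kind == "RDS":
            route_target = payload
        else:
            raise ValueError(f"unknown xDS type: {kind}")
        # Unknown cluster or no endpoints: the request cannot be forwarded.
        if not clusters.get(route_target):
            lost += requests_per_step
    return lost


route_first = [("RDS", "cluster_y"),
               ("CDS", "cluster_y"),
               ("EDS", ("cluster_y", ["10.0.0.9:8080"]))]
clusters_first = [("CDS", "cluster_y"),
                  ("EDS", ("cluster_y", ["10.0.0.9:8080"])),
                  ("RDS", "cluster_y")]

if __name__ == "__main__":
    print(count_lost_requests(route_first))     # 2
    print(count_lost_requests(clusters_first))  # 0
```

The same three updates lose traffic or not depending only on their arrival order, which is exactly the problem that independent, eventually consistent streams cannot rule out.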

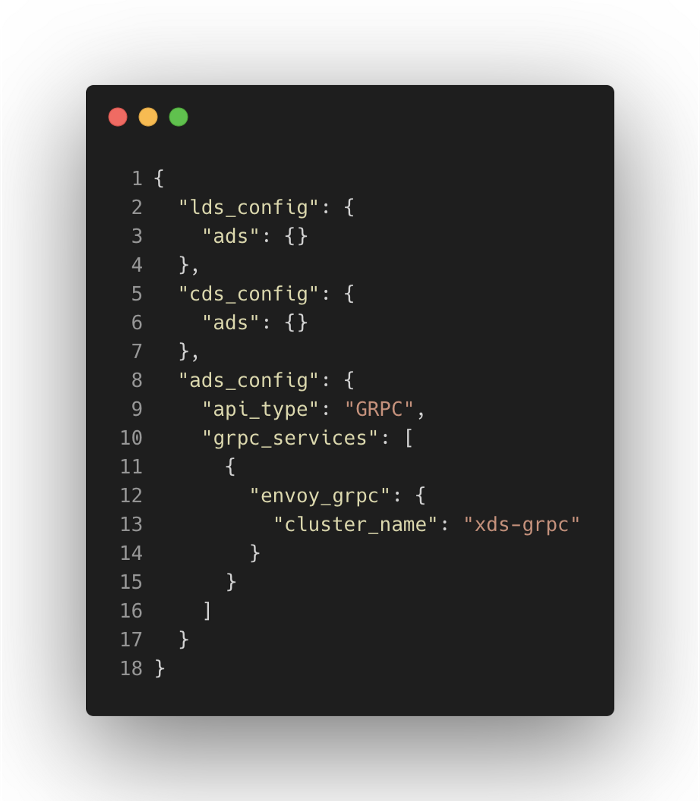

Aggregated Discovery Service

To overcome this problem, we have to ensure that the data delivered by the discovery services is consistent. One way to do this is the Aggregated Discovery Service (ADS). ADS delivers all configuration updates over a single gRPC stream, which lets the management server control the order in which each discovery service’s updates are sent and avoids the inconsistencies caused by updates arriving out of order.
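Because every resource type travels over the same ADS stream, the management server can sequence a batch of updates before sending it. The sketch below is a hypothetical helper, not Istio’s or Envoy’s code: it orders updates as CDS, then EDS, then LDS, then RDS, the "make before break" order recommended in Envoy’s xDS protocol documentation, so a route never references a cluster Envoy has not received yet.

```python
# Push order for "make before break" updates, per Envoy's xDS protocol docs:
# clusters, then their endpoints, then listeners, then routes.
ADS_ORDER = {"CDS": 0, "EDS": 1, "LDS": 2, "RDS": 3}


def sequence_for_ads(pending):
    """Order a batch of (type, resource) updates before sending them on one stream.

    sorted() is stable, so updates of the same type keep their original order.
    """
    unknown = {kind for kind, _ in pending if kind not in ADS_ORDER}
    if unknown:
        raise ValueError(f"unknown xDS types: {sorted(unknown)}")
    return sorted(pending, key=lambda update: ADS_ORDER[update[0]])


if __name__ == "__main__":
    batch = [("RDS", "route: shift traffic to cluster_y"),
             ("EDS", "cluster_y endpoints"),
             ("CDS", "cluster_y")]
    for kind, resource in sequence_for_ads(batch):
        print(kind, resource)
```

Run against the race from the previous section, this ordering turns the route-first batch into the clusters-first batch, so no request is sent to an unknown cluster.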

Istio Traffic Management

To understand how Istio manages traffic, we need to look at how Istio configures the data plane. First, let’s examine how the Istio sidecar configuration is bootstrapped.

Istio sidecar configuration bootstrap

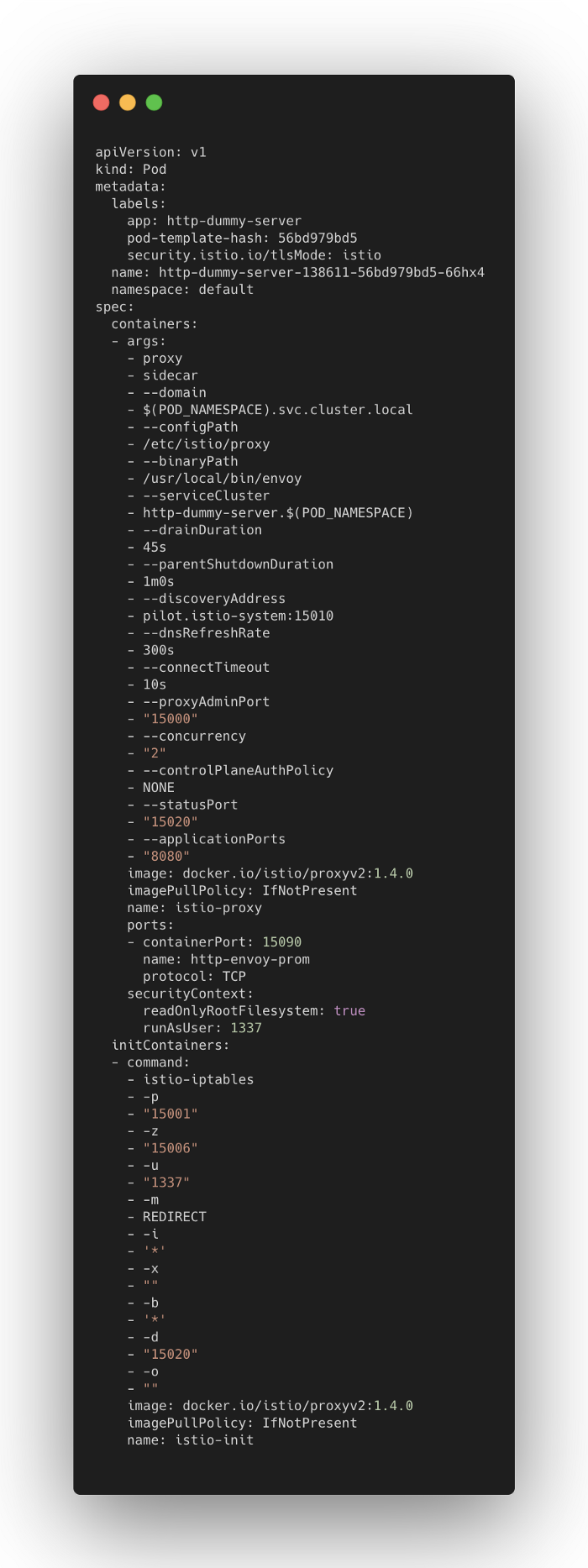

Istio implements automatic sidecar injection using a Kubernetes mutating admission webhook. When you set the istio-injection=enabled label on a namespace and the injection webhook is enabled, every new pod created in that namespace automatically gets an Istio sidecar. The injection adds two containers to the pod: the Istio sidecar container and an init container.
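Conceptually, the mutating webhook receives the pod object and returns a modified version of it. The Python sketch below mimics that decision and patch on plain dictionaries. It is a deliberate simplification: a real webhook answers an AdmissionReview with a JSON patch and injects far more fields. The container names follow Istio’s defaults (istio-init, istio-proxy), the istio-init arguments are trimmed to the ones discussed in this post, and the inject_sidecar function is hypothetical.

```python
import copy


def inject_sidecar(pod: dict, namespace_labels: dict) -> dict:
    """Return the pod with Istio-style containers added, if its namespace opted in.

    Simplified sketch: a real webhook returns a JSON patch inside an
    AdmissionReview response and injects many more fields.
    """
    if namespace_labels.get("istio-injection") != "enabled":
        return pod  # namespace did not opt in: leave the pod untouched

    patched = copy.deepcopy(pod)  # never mutate the incoming object
    spec = patched.setdefault("spec", {})

    # Init container: sets up iptables redirection, then exits.
    spec.setdefault("initContainers", []).append({
        "name": "istio-init",
        "args": ["-p", "15001", "-z", "15006", "-u", "1337", "-b", "*"],
    })
    # Sidecar container: pilot-agent + Envoy, running as UID 1337.
    spec.setdefault("containers", []).append({
        "name": "istio-proxy",
        "securityContext": {"runAsUser": 1337},
    })
    return patched


if __name__ == "__main__":
    pod = {"metadata": {"name": "http-dummypod"},
           "spec": {"containers": [{"name": "app"}]}}
    injected = inject_sidecar(pod, {"istio-injection": "enabled"})
    print([c["name"] for c in injected["spec"]["initContainers"]])  # ['istio-init']
    print([c["name"] for c in injected["spec"]["containers"]])      # ['app', 'istio-proxy']
```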

Below is a sample pod specification snippet:

Istio Init

In the above snippet, there is an init container for http-dummypod. An init container is like a regular container, except that it runs to completion and is executed before all regular containers start.

This init container is responsible for setting up the iptables rules, organised into chains, that determine how network packets are treated. The command argument for the init container is istio-iptables, and you can check the source code for this command here. This command accepts these key parameters:

- Parameter -p specifies the port to which all outbound TCP traffic from the pod/VM is redirected. The above value is 15001.

- Parameter -z specifies the port to which all inbound TCP traffic to the pod/VM is redirected. The above value is 15006.

- Parameter -u specifies the UID of the user whose traffic is exempt from redirection. The above value is 1337, Envoy’s own UID as set in securityContext.runAsUser of the istio-proxy specification. This prevents iptables from redirecting traffic sent by Envoy back to Envoy, which would form an infinite loop.

- Parameter -b specifies the list of inbound ports whose traffic is redirected to Envoy. The above value is *, which redirects incoming traffic on all ports to Envoy.

As a result, all outbound traffic is redirected to Envoy on port 15001 and all inbound traffic to port 15006, with one exception: traffic generated by Envoy itself is not subject to these rules.
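The combined effect of these parameters can be summarised as a small decision function. The Python sketch below models only -p, -z, -u, and -b, and ignores everything else istio-iptables handles (for example, excluded ports or IP ranges and loopback traffic); the redirect_port function and its argument names are hypothetical.

```python
def redirect_port(direction, dst_port, owner_uid=None, *,
                  outbound_capture=15001,  # -p
                  inbound_capture=15006,   # -z
                  proxy_uid=1337,          # -u
                  inbound_ports="*"):      # -b ("*" or a set of ports)
    """Return the port a packet is redirected to, or None if it passes through.

    Models only -p, -z, -u and -b; real istio-iptables rules do more.
    """
    if direction == "outbound":
        # Traffic created by Envoy's own UID is exempt; otherwise Envoy's
        # upstream connections would be redirected back into Envoy forever.
        if owner_uid == proxy_uid:
            return None
        return outbound_capture
    if direction == "inbound":
        if inbound_ports == "*" or dst_port in inbound_ports:
            return inbound_capture
        return None
    raise ValueError(f"direction must be 'inbound' or 'outbound', got {direction!r}")


if __name__ == "__main__":
    print(redirect_port("outbound", 443, owner_uid=1000))  # 15001: app traffic goes to Envoy
    print(redirect_port("outbound", 443, owner_uid=1337))  # None: Envoy's own traffic
    print(redirect_port("inbound", 8080))                  # 15006: all ports captured
```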

Istio Proxy

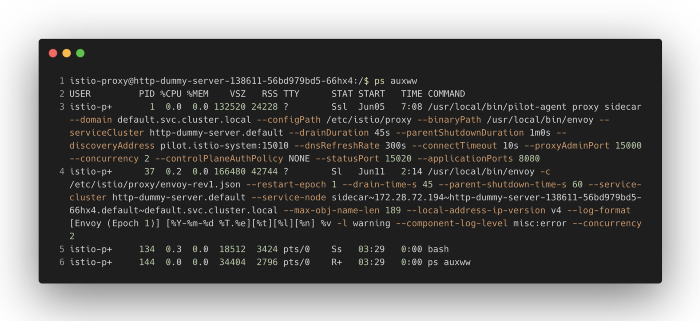

Here’s what’s inside the istio-proxy container and the processes running in it:

There are two processes inside this container: pilot-agent and Envoy.

Envoy uses the xDS protocol to configure its resources dynamically, including listeners, filters, routes, clusters, and endpoints. These resources are fetched from the Istio control plane over xDS, which is how all instances inside the mesh learn about each other.

But how does the Istio proxy know where the xDS server is? 🤔

The pilot-agent process generates Envoy’s initial configuration file from the arguments passed to it and the configuration information in the Kubernetes API server, and it is responsible for starting the Envoy process. If you look closely, the Envoy process is started with the -c argument, which points to a file that is parsed as the bootstrap configuration. Here, that file is /etc/istio/proxy/envoy-rev1.json.

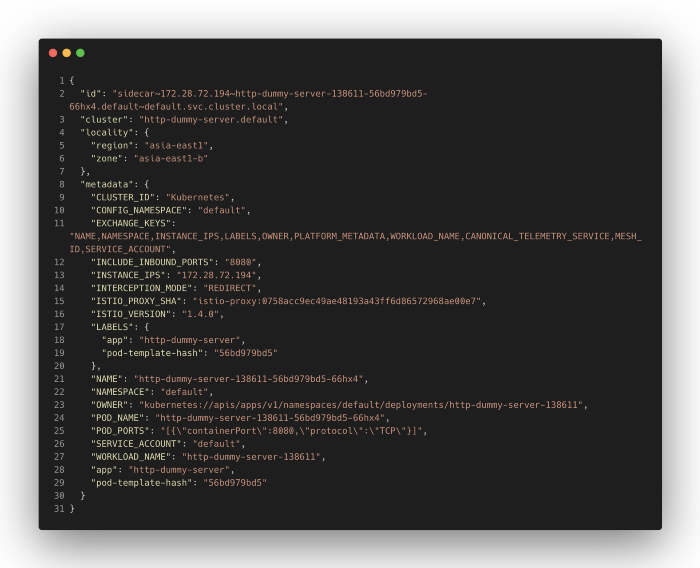

This file has five root keys:

- node: The identity of the current node, presented to the management server and used for instance identification (e.g. in generated headers).

- stats_config: Configuration for internal processing of stats.

- admin: Configuration for the local administration HTTP server.

- dynamic_resources: xDS configuration sources.

- static_resources: Statically specified resources, including the xds-grpc cluster, which corresponds to the ADS configuration in dynamic_resources. This cluster holds the address of the server from which Envoy obtains dynamic resources. There is also one listener on port 15090 for the Prometheus stats endpoint, which is routed to the prometheus_stats static cluster.
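To see how the five root keys fit together, here is a Python sketch of what a pilot-agent-like step could do: build a bootstrap dictionary, write it to a JSON file, and return the Envoy command line with -c. Field names follow Envoy’s bootstrap API, but the result is heavily trimmed, omits the port 15090 listener, and is not validated against Envoy. The node ID, the Pilot address istio-pilot.istio-system:15010, and the helper names are assumptions for illustration; the command is only returned, never executed.

```python
import json
import os
import tempfile

ADMIN_PORT = 15000  # Envoy admin port used throughout this post


def socket_address(host, port):
    return {"socket_address": {"address": host, "port_value": port}}


def static_cluster(name, host, port, cluster_type):
    """One statically defined cluster with a single endpoint."""
    return {
        "name": name,
        "type": cluster_type,
        "connect_timeout": "1s",
        "load_assignment": {
            "cluster_name": name,
            "endpoints": [{"lb_endpoints": [{"endpoint": {"address": socket_address(host, port)}}]}],
        },
    }


def build_bootstrap(node_id, node_cluster, xds_host, xds_port):
    """Envoy-style bootstrap with the five root keys described above (simplified)."""
    xds = static_cluster("xds-grpc", xds_host, xds_port, "STRICT_DNS")
    xds["http2_protocol_options"] = {}  # xDS runs over gRPC, which needs HTTP/2
    return {
        "node": {"id": node_id, "cluster": node_cluster},
        "stats_config": {"use_all_default_tags": False},
        "admin": {"address": socket_address("127.0.0.1", ADMIN_PORT)},
        "dynamic_resources": {
            # Listeners and clusters come over ADS, served via the xds-grpc cluster.
            "lds_config": {"ads": {}},
            "cds_config": {"ads": {}},
            "ads_config": {
                "api_type": "GRPC",
                "grpc_services": [{"envoy_grpc": {"cluster_name": "xds-grpc"}}],
            },
        },
        "static_resources": {
            "clusters": [
                xds,
                static_cluster("prometheus_stats", "127.0.0.1", ADMIN_PORT, "STATIC"),
            ],
        },
    }


def write_bootstrap(bootstrap, path):
    """Write the bootstrap file and return the Envoy command line (not executed)."""
    with open(path, "w") as f:
        json.dump(bootstrap, f, indent=2)
    return ["envoy", "-c", path]


if __name__ == "__main__":
    bootstrap = build_bootstrap(
        node_id="sidecar~10.0.0.5~http-dummypod.default~default.svc.cluster.local",
        node_cluster="http-dummypod",
        xds_host="istio-pilot.istio-system",  # assumed Pilot address
        xds_port=15010,                       # assumed plaintext xDS port
    )
    path = os.path.join(tempfile.mkdtemp(), "envoy-rev1.json")
    print(write_bootstrap(bootstrap, path))
```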

From the bootstrap configuration, we can roughly see how Istio’s service discovery and traffic management work through the Envoy proxy. The control plane acts as the xDS server, and for each instance inside the mesh, Istio injects an Istio init container and an Istio proxy container.

Istio init sets the rules to redirect all outbound and inbound traffic through Istio proxy.

Before Envoy starts inside the Istio proxy container, the pilot-agent process initialises the bootstrap configuration, which contains the static resources, and then starts Envoy with that bootstrap configuration.

After Envoy starts, it obtains its dynamic configuration from the xDS server, including service information and the routing rules defined in the mesh.

In Part 2 of the blog, we’ll walk through what the Istio sidecar’s dynamic configuration looks like. Stay tuned!
