Customising VerneMQ - The Message Broker For Our Information Superhighway

Read on to learn how we customised VerneMQ, the new features we built into it, and the challenges we faced along the way.

By Swagat Parida

Keen readers of our blog will know how we landed on MQTT, and on VerneMQ in particular, as the broker of choice for building Courier, the information superhighway between mobile devices and our backend servers.

ICYMI, here’s something of a refresher:

The more time we spent running VerneMQ in our backend, the more features we found that would be great to have. We also ran into a few limitations of VerneMQ that we want to talk about. To understand these limitations, though, you first need to understand how VerneMQ operates in clustered mode.

Challenges of running VerneMQ in cluster mode

Like most scalable, highly available systems, VerneMQ is quite easy to cluster. It follows an eventual consistency model, and its replication is leaderless. While running a clustered deployment, we found that because replication is leaderless, unplanned node outages caused a lot of problems. Most of them showed up when a node in the cluster was restarted:

- The large volume of messages persisted for offline clients while the node was up became a major obstacle to quick recovery, because on restart the node spent a long time recovering this state from disk.

- A restarted node needs to synchronise its state with the other nodes in the cluster. State here means messages and subscription information, which is shared across the cluster. Even after a lot of tuning, we noticed that a restarted node would sometimes get stuck in a loop while synchronising state.

The only way out was to redeploy the cluster from scratch, which meant losing all messages and subscriptions anyway.
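
To make the restart problem more concrete, here is a toy model of leaderless replication. It is a generic anti-entropy sketch under simplifying assumptions (last-writer-wins on a logical timestamp, full-state pulls from every peer) and is not VerneMQ's actual metadata-store algorithm; `Replica`, `pull_from` and `catch_up` are hypothetical names. A restarted node with empty state keeps pulling from its peers until a round changes nothing.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """One node in a toy leaderless cluster (hypothetical, not VerneMQ's store)."""
    name: str
    # key -> ((logical_timestamp, writer_node), value); the version tuple gives
    # a deterministic last-writer-wins order even when timestamps tie.
    state: dict = field(default_factory=dict)

    def write(self, key, value, ts):
        # Any node may accept a write: there is no leader to route it through.
        self._merge(key, ((ts, self.name), value))

    def _merge(self, key, entry):
        """Keep whichever entry has the higher version; return True if state changed."""
        current = self.state.get(key)
        if current is None or entry[0] > current[0]:
            self.state[key] = entry
            return True
        return False

    def pull_from(self, peer):
        """One anti-entropy pass: adopt every newer entry the peer holds."""
        return sum(self._merge(k, e) for k, e in list(peer.state.items()))


def catch_up(node, peers, max_rounds=10):
    """Repeat pull passes until a pass changes nothing.

    Returns the number of rounds used, or None if the node did not converge
    within max_rounds (for example, because peers keep receiving new writes).
    """
    for rounds in range(1, max_rounds + 1):
        if sum(node.pull_from(p) for p in peers) == 0:
            return rounds
    return None


if __name__ == "__main__":
    a, b = Replica("a"), Replica("b")
    a.write("sub/client-1", "rides/#", ts=1)
    b.write("sub/client-2", "food/#", ts=2)
    b.write("sub/client-1", "rides/+/status", ts=3)  # newer write for the same key

    c = Replica("c")  # node "c" restarted with empty in-memory state
    print(catch_up(c, [a, b]))  # 2: one round that changes state, one that confirms
    print({k: v for k, (_, v) in c.state.items()})
```

The `max_rounds` cap is there because the loop only ends when a round makes no changes. If peers keep accepting writes during catch-up, nothing in this scheme guarantees that happens within a fixed number of rounds, so a real system needs some bound of this kind.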

Apart from these problems, we also wanted some features that the open-source version did not provide:

- Faster recovery. We wanted to reduce the time a restarted broker takes to come back up and start serving clients.

- JWT-based authentication.

- Enhanced ACLs. VerneMQ ships with an ACL implementation, but we wanted to add a few options of our own. We added ACL options that tokenise client IDs and usernames and use those tokens to verify a client's access.

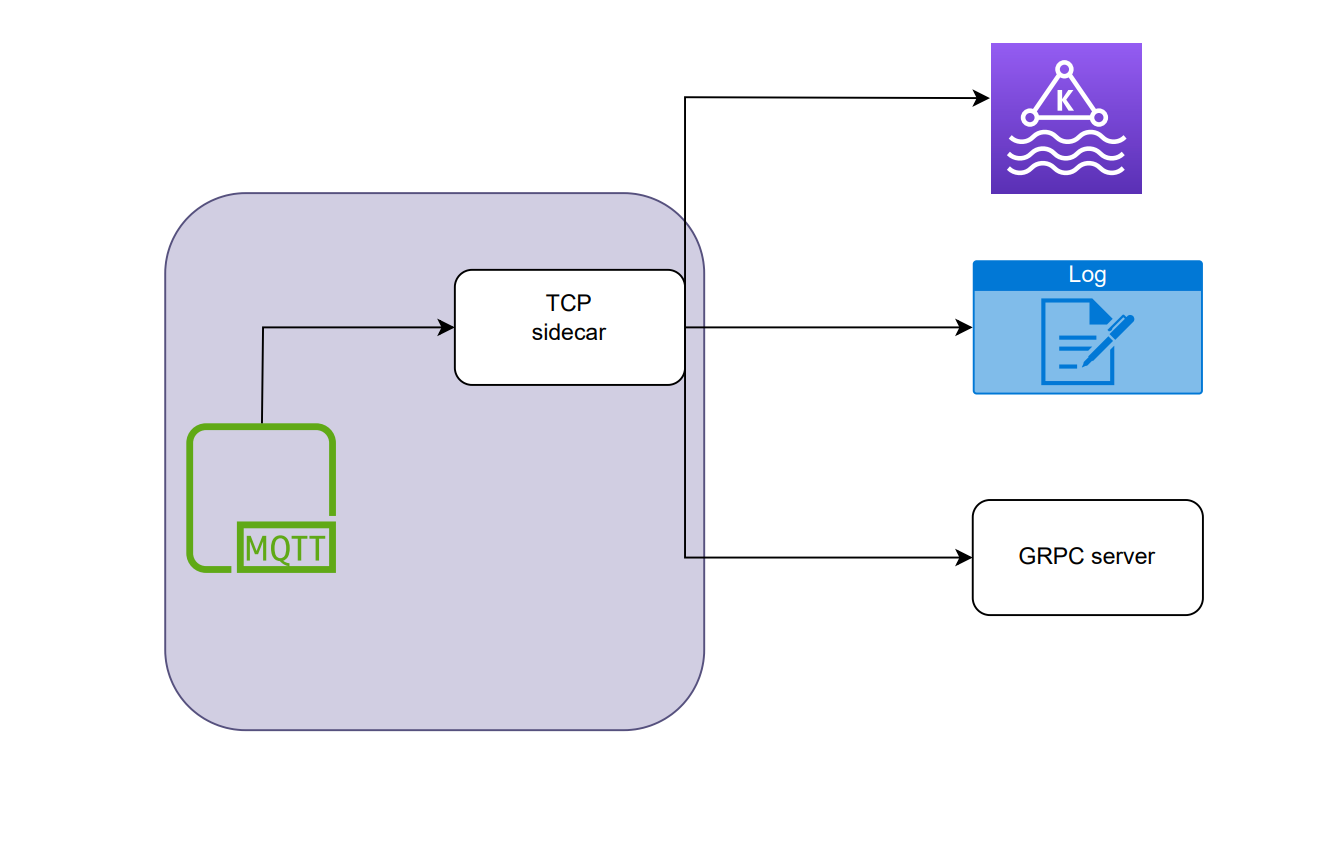

- A faster way to relay events. VerneMQ provides HTTP webhooks to relay various kinds of events to the services that need them. But, as you may know, HTTP is slow! Admittedly, that is putting it too simplistically.

With HTTP webhooks, message throughput never went beyond 1.3k requests per second (rps), and the p99 latency of the HTTP calls was above 1s. Moving the hooks to asynchronous worker jobs brought the p99 under 3ms, and throughput peaked at 5k rps.

We suspected that HTTP/1.1 was the issue, since in our setup every request opened a new connection and consumed more VM resources. We concluded that HTTP/1.1 was the bottleneck and that we needed a better mechanism for relaying events.
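
The post does not show the syntax of the tokenised ACL options, so the following is only a sketch of the idea. It assumes client IDs shaped like `app:user_id:device_id` and a hypothetical placeholder syntax where `%c[i]` and `%u[i]` stand for the i-th token of the client ID and username. Each placeholder is expanded, and the resulting filter is then matched against the topic using standard MQTT `+`/`#` wildcard rules.

```python
import re

# Hypothetical placeholder syntax: %c[i] / %u[i] = i-th token of client ID / username.
_PLACEHOLDER = re.compile(r"%([cu])\[(\d+)\]")
# A token must never smuggle a wildcard or an extra topic level into the filter.
_FORBIDDEN = set("+#/")


def expand_pattern(pattern, client_id, username, delimiter=":"):
    """Substitute tokenised identifiers into an ACL topic pattern.

    Raises ValueError if a referenced token is missing or unsafe.
    """
    sources = {"c": client_id.split(delimiter), "u": username.split(delimiter)}

    def substitute(match):
        tokens = sources[match.group(1)]
        index = int(match.group(2))
        if index >= len(tokens):
            raise ValueError(f"{match.group(0)}: no such token")
        token = tokens[index]
        if not token or _FORBIDDEN & set(token):
            raise ValueError(f"{match.group(0)}: unsafe token {token!r}")
        return token

    return _PLACEHOLDER.sub(substitute, pattern)


def topic_matches(topic_filter, topic):
    """MQTT filter matching: '+' matches one level, '#' matches the rest."""
    f_levels, t_levels = topic_filter.split("/"), topic.split("/")
    for i, level in enumerate(f_levels):
        if level == "#":
            return True  # also matches the parent level, e.g. "a/#" matches "a"
        if i >= len(t_levels):
            return False
        if level != "+" and level != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


def is_allowed(acl_patterns, client_id, username, topic):
    """Grant access if any expanded pattern matches the topic."""
    for pattern in acl_patterns:
        try:
            topic_filter = expand_pattern(pattern, client_id, username)
        except ValueError:
            continue  # pattern cannot apply to this client, so skip it
        if topic_matches(topic_filter, topic):
            return True
    return False


if __name__ == "__main__":
    acl = ["devices/%c[1]/%c[2]/#", "users/%u[0]/inbox"]
    print(is_allowed(acl, "courier:42:android", "42", "devices/42/android/location"))  # True
    print(is_allowed(acl, "courier:42:android", "42", "devices/43/android/location"))  # False
    print(is_allowed(acl, "courier:#:android", "x", "devices/99/android/location"))   # False
```

Rejecting tokens that contain `+`, `#` or `/` matters here: without that check, a client that picked the ID `courier:#:android` could widen its own ACL to every device.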

Enhancements

To address the issues described in the previous section, we made the following changes to VerneMQ:

- Provide an option to disable disk based persistence.

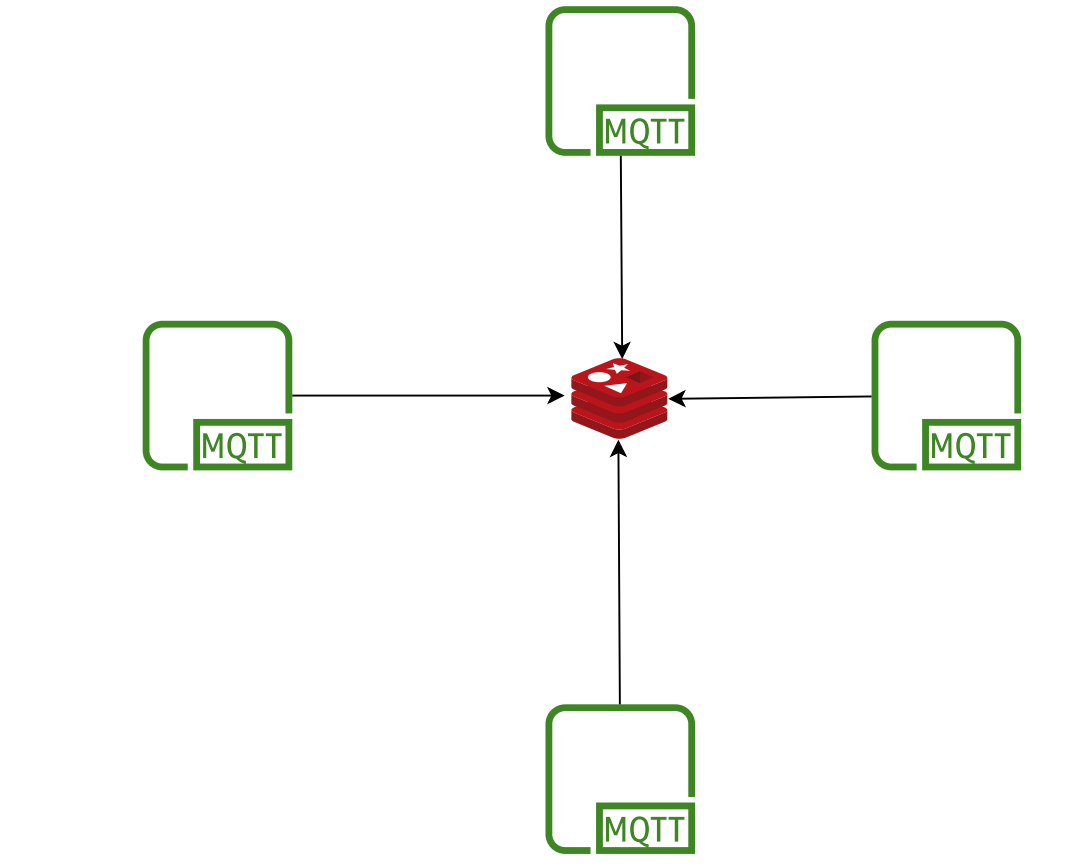

- Build a Redis based subscription store to bypass leaderless replication. This would help us in avoiding the pitfalls of the synchronisation loop.

3. Build a JWT-based authentication plugin.

4. Extend the capabilities of the ACL plugin.

5. Use TCP instead of HTTP to propagate events, so that a sidecar or any TCP-based server can consume them.
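
The post does not say which signing algorithm or claims the JWT plugin checks. The sketch below uses only the standard library and rests on a few assumptions: clients send the JWT as their MQTT CONNECT password, tokens are signed with a shared HS256 secret, and the `sub` claim must equal the MQTT username (a hypothetical policy). It shows the checks such a plugin might perform. In production, a maintained JWT library is usually a better choice than hand-rolled verification.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_encode(raw):
    # JWT segments are base64url without "=" padding.
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


def _b64url_decode(segment):
    # Restore the stripped padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(claims, secret):
    """Build an HS256 JWT; used here only to produce test tokens."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signing_input = f"{header}.{payload}"
    signature = hmac.new(secret, signing_input.encode(), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url_encode(signature)}"


def verify_hs256(token, secret, expected_username, now=None):
    """Return the claims if token is a valid, unexpired HS256 JWT for this user, else None."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
    except ValueError:  # wrong segment count, bad base64 or bad JSON
        return None
    # Pin the algorithm instead of trusting the token's header (e.g. "alg": "none").
    if not isinstance(header, dict) or header.get("alg") != "HS256":
        return None
    signing_input = f"{header_b64}.{payload_b64}".encode()
    expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, signature):  # constant-time comparison
        return None
    if not isinstance(claims, dict):
        return None
    now = time.time() if now is None else now
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or exp <= now:
        return None
    # Hypothetical policy: the token's subject must match the MQTT username.
    if claims.get("sub") != expected_username:
        return None
    return claims


if __name__ == "__main__":
    secret = b"change-me"  # hypothetical shared secret
    token = sign_hs256({"sub": "42", "exp": time.time() + 60}, secret)
    print(verify_hs256(token, secret, "42") is not None)  # True
    print(verify_hs256(token, secret, "43"))              # None
```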
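
The post does not describe the fork's wire format, so this is only a generic sketch of relaying hook events over TCP. Each event is JSON-encoded and written with a 4-byte big-endian length prefix over a single long-lived connection, and a sidecar reads the frames back one by one. The framing scheme, the event fields and the function names are all assumptions for illustration. Reusing one connection avoids the per-request connection setup that the HTTP webhooks suffered from.

```python
import json
import socket
import struct

_HEADER = struct.Struct("!I")  # 4-byte big-endian payload length


def send_event(sock, event):
    """Frame one event as <length><json> and write it on an already-open connection."""
    payload = json.dumps(event, separators=(",", ":")).encode()
    sock.sendall(_HEADER.pack(len(payload)) + payload)


def _recv_exact(sock, n):
    # TCP is a byte stream, so a single recv() may return only part of a frame.
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection mid-frame")
        buf += chunk
    return bytes(buf)


def recv_event(sock):
    """Read one length-prefixed event; return None on a clean end of stream."""
    first = sock.recv(_HEADER.size)
    if not first:
        return None  # peer closed the connection between frames
    header = first + _recv_exact(sock, _HEADER.size - len(first))
    (length,) = _HEADER.unpack(header)
    return json.loads(_recv_exact(sock, length))


if __name__ == "__main__":
    # socketpair() stands in for a broker-to-sidecar TCP connection.
    broker_side, sidecar_side = socket.socketpair()
    events = [{"hook": "on_publish", "topic": f"devices/{i}/location"} for i in range(3)]
    for event in events:
        send_event(broker_side, event)  # every event reuses the same connection
    broker_side.close()

    received = []
    while True:
        event = recv_event(sidecar_side)
        if event is None:
            break
        received.append(event)
    sidecar_side.close()
    print(received == events)  # True
```

The length prefix is what makes this work over TCP: a read can return half an event or several events at once, so the reader loops until it holds exactly one complete frame.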

Open sourcing our changes

We’re excited to announce that we have open-sourced these features in our fork of the VerneMQ project:

You can learn more about these here.

Watch this space for more updates on challenges we face and how we solve them.
